In the past week, leading journalists have reported on the alarming rise in nonconsensual, AI-generated deepfake pornography targeting women and girls. The problem has prompted rare bipartisan support in both the U.S. Senate and House of Representatives for legislation to mitigate it.

This post examines the threats posed by nonconsensual AI pornography and the relevant laws and legislation. We also share preliminary findings from our assessment of the DEFIANCE Act’s impact on the deepfake pornography supply chain. Finally, we summarize our insights and recommendations in the Outlook section.

Findings Summary: We conclude, on preliminary evidence, that the deepfake porn industry and community appear undeterred by the announced DEFIANCE legislation. This assessment draws on domain intelligence from the DomainTools Iris platform, with research and editorial support from our chatbot toolkit.

Threat Overview

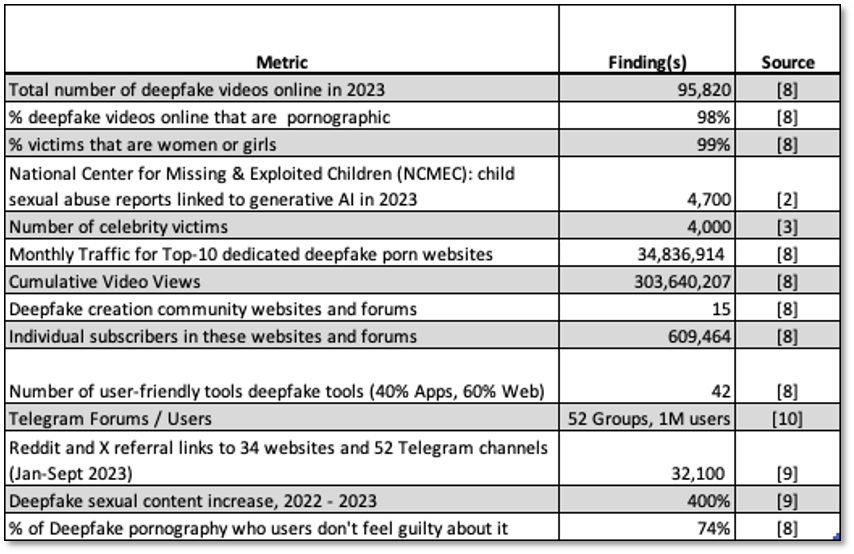

Over the last week, reports from the NYTimes, Wired Magazine, and The Guardian have described the deepfake pornography crisis as a form of sexual abuse. [1-3] Women and girls are the victims in 99% of these cases. Notable victims include celebrities like Taylor Swift, public figures such as a female member of the U.S. House of Representatives, and thirty high school girls in New Jersey. Victims suffer humiliation, anxiety, depression, and diminished education and career opportunities, and in some cases are driven to suicide. The effects can last a lifetime. Perpetrators include providers of open source AI image generation models, content creators, technology infrastructure providers, and consumers. Sobering statistics on the scope and scale of the threat are shown in Table 1. [2,3,8,9,10]

The 400% increase in cases in 2023 is fueled by open source image generators that produce hyperrealistic images or pseudo-images, have no guardrails, are purpose-built for removing clothes, are cheap or even free, and are simple to use. [11]

Control.ai describes a supply chain with four tiers. [9] The base tiers comprise a small number of powerful Model Developers and Model Providers of image generators, including OpenAI (DALL-E), Google (Imagen), StabilityAI (Stable Diffusion), Midjourney, DeepFaceLab, and FaceSwap. Compute Providers include the leading cloud infrastructure providers (e.g., Amazon, Google, Microsoft, IBM). The largest tier is the Deepfake Creators. Because unskilled users can create 60-second videos in minutes or even seconds for free, this tier includes millions of creators drawn from pornography communities on Reddit and other social networks or forums.

In addition to the direct supply chain, infrastructure providers of essential Internet and Web services are a vital part of the ecosystem. These enablers include domain registrars, hosting services, social networks, and search engines. Because deepfakes are monetized through premium subscriptions, online ads, and loyalty programs, providers of payment processing, ad network, and SEO (Search Engine Optimization) services should also be counted among the enablers.

Laws and Legislation

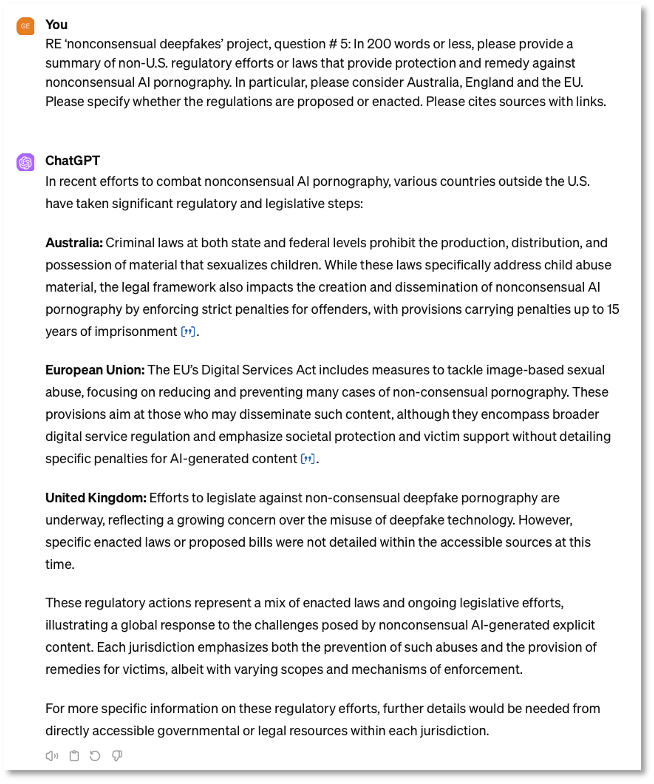

The current status of laws protecting society, and women in particular, is complicated. On the one hand, there are too many laws. In the U.S. there are 407 AI-related bills across more than 40 states, many of which target nonconsensual AI porn. [13] Internationally, the EU and a number of countries either have laws or are developing them. [16] On the other hand, existing laws are weak or non-existent. Only ten U.S. states have laws aimed specifically at the problem of nonconsensual AI pornography. [15] When the New Jersey high school victims went to the police, they learned that the perpetrators, their high school classmates, had not broken any laws. [1]

Examples of proposed U.S. federal legislation:

- In May 2023, Rep. Joe Morelle, D-N.Y., introduced the Preventing Deepfakes of Intimate Images Act, which would criminalize the production and sharing of nonconsensual, AI-generated, sexually explicit material. [6,7]

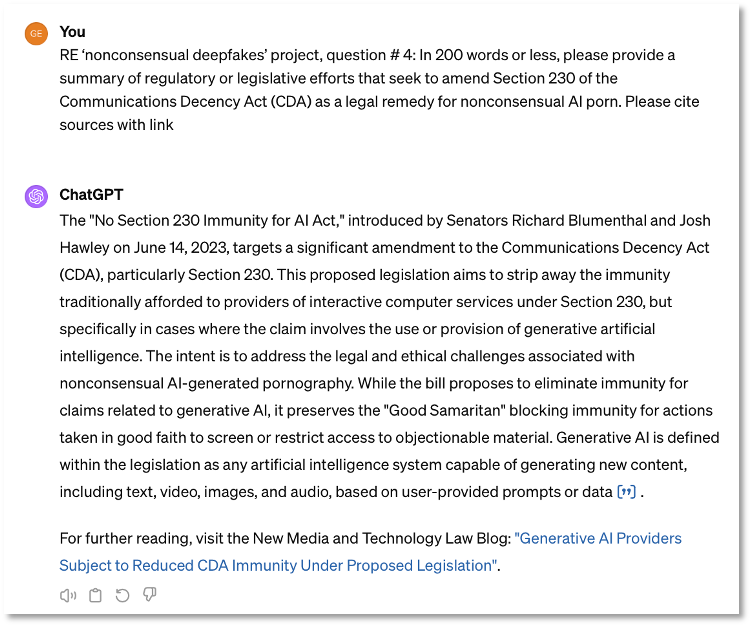

- On 14-June-2023, the No Section 230 Immunity for AI Act was introduced in the U.S. Senate with bipartisan support. It would remove the immunity protections that service providers currently enjoy under Section 230 of the Communications Decency Act (CDA). [19]

- On 30-Jan-2024, the Disrupt Explicit Forged Images and Non-Consensual Edits Act of 2024 (DEFIANCE Act) was introduced in the U.S. Senate with bipartisan support. It would provide civil remedies against individuals who produce or possess banned content, and would double the statute of limitations to 10 years. [4] On 7-March-2024, a companion bill supporting the DEFIANCE Act was introduced in the U.S. House of Representatives, also with bipartisan support. [5]

Because tracking laws and regulations at the state, federal, and international levels is arduous, we employed our chatbot toolkit (ChatGPT 4.0, Copilot, Perplexity.ai) as research assistants. Figure 1 shows a ChatGPT dialog on legislation proposing amendments to strengthen CDA 230 as a remedy for deepfake porn. Figure 2 shows a ChatGPT dialog requesting a summary of international regulations and laws.

While legislative efforts are encouraging, it is important to differentiate between proposed legislation, enacted legislation, and enforcement. At present, regulatory protections have too many gaps: they do not provide remedies to victims, do not deter users, and do not hold the technology enablers accountable.

Findings

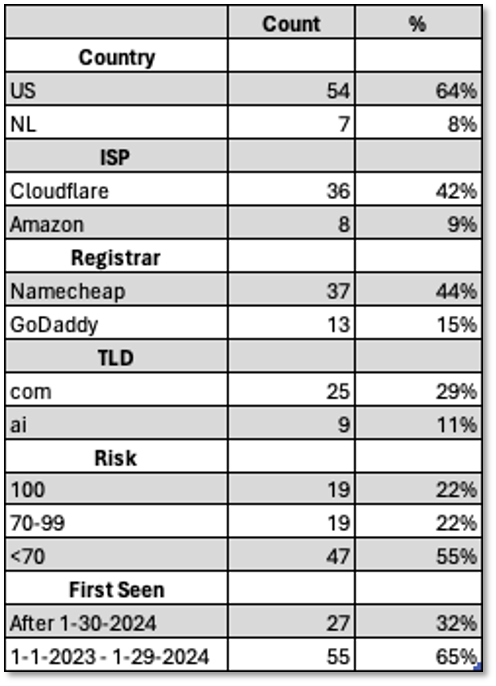

We used the DomainTools Iris platform to build a data collection of domain name registrations linked to AI-generated pornography, with a focus on registrations made after the DEFIANCE Act was announced on January 30, 2024. Starting with a seed list of nine known AI porn websites, we widened the search to domain names containing unambiguously related wordforms. The resulting collection comprises 85 domains registered over the last twenty-four months.

A summary of our findings is shown in Table 2. While we typically provide domain name details, we are withholding this information given the sensitivity of the data.

Key Insights:

- Country, ISP, and Registrar data are essential for legal takedown and law enforcement actions.

- TLD (Top Level Domain) data is used to assess how well domain registries oversee their domain registrars.

- Risk Assessment: 44% of the domains are also classified as high risk (score 70 or higher) and associated with malicious activity such as phishing, malware, or spam.

- Post-DEFIANCE Registrations: Based on the ‘First Seen’ dates, 27% of these domains were registered after the announcement of the DEFIANCE Act, and 97% were registered in the past 15 months, following the surge in use of AI image generation tools.
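
Percentages like the two above can be derived from an exported domain list with a few lines of code. The following is a minimal sketch, not the workflow used for Table 2: the `DomainRecord` layout, the field names, and the sample rows are all hypothetical placeholders, and nothing here reflects the actual DomainTools Iris export format or our data. Only the risk threshold (70) and the announcement date (30-Jan-2024) come from the text.

```python
from dataclasses import dataclass
from datetime import date

# Values taken from the post; everything else below is hypothetical.
HIGH_RISK_THRESHOLD = 70
DEFIANCE_ANNOUNCED = date(2024, 1, 30)


@dataclass(frozen=True)
class DomainRecord:
    """Hypothetical record layout; not the Iris export schema."""
    domain: str       # placeholder name, never a real site
    risk_score: int   # 0-100
    first_seen: date


def share(records, predicate):
    """Percentage of records matching predicate, rounded to a whole number."""
    if not records:
        return 0
    matches = sum(1 for r in records if predicate(r))
    return round(100 * matches / len(records))


def summarize(records):
    """Compute the two headline percentages for a list of records."""
    return {
        # "High risk" means a score at or above the threshold.
        "high_risk_pct": share(
            records, lambda r: r.risk_score >= HIGH_RISK_THRESHOLD
        ),
        # Counts domains first seen strictly after the announcement date.
        "post_defiance_pct": share(
            records, lambda r: r.first_seen > DEFIANCE_ANNOUNCED
        ),
    }


# Illustrative rows only; these are made-up values on a reserved test TLD.
SAMPLE = [
    DomainRecord("example-a.test", 100, date(2023, 6, 1)),
    DomainRecord("example-b.test", 79, date(2024, 2, 14)),
    DomainRecord("example-c.test", 66, date(2024, 2, 14)),
    DomainRecord("example-d.test", 12, date(2023, 11, 20)),
]

if __name__ == "__main__":
    print(summarize(SAMPLE))
```

Keeping the threshold and the cutoff date as named constants makes it easy to rerun the same summary if a different risk cutoff or reference date is of interest.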

While the data set is too small to draw conclusions regarding the effect of DEFIANCE on domain registrations, we can gauge the effect through qualitative analysis of screenshots we collected using DomainTools. Judging from the brazen marketing and promotional messaging in these examples, the organizations or individuals creating and selling the AI porn seem undeterred by, or unaware of, DEFIANCE. (Note: We did not visit any live websites. All screenshots were obtained from DomainTools.)

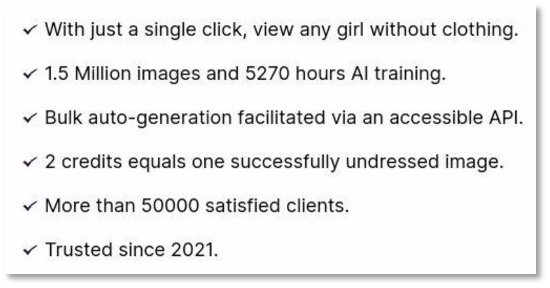

For example, as shown in Figure 3, a site registered through NameCheap and hosted in the Netherlands, with a risk score of 100, boasts about its large customer base (50,000) and image library size (1.5M), signaling confidence in its operations despite potential legal risks.

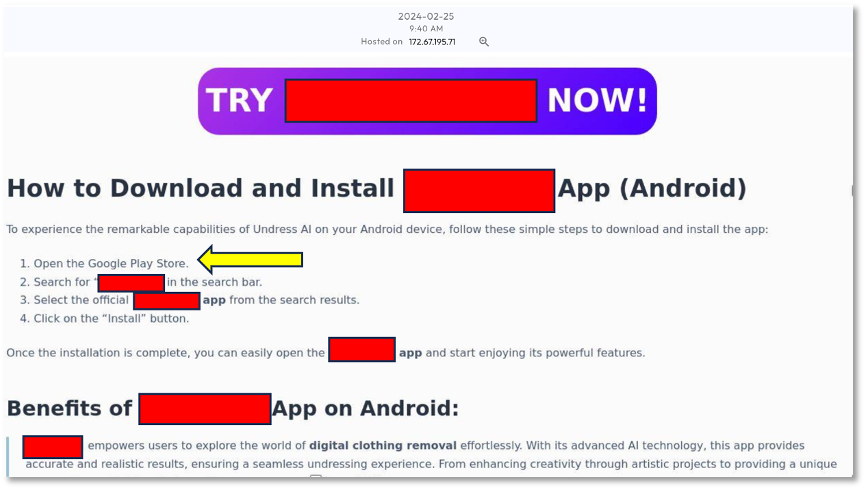

Similarly, Figure 4 shows an app first seen 14-Feb-2024, after DEFIANCE. The app promotes its ‘seamless undressing experience’ and its availability in the Google Play Store. The website has a high risk score of 79, is classified as phishing, was registered through NameCheap, and is hosted in the U.S. by Cloudflare. Based on the risk score and the nature of the content, a strong case could be made for a domain takedown through NameCheap. Its listing in the Google Play Store supports the case for removing protections for service providers under CDA 230.

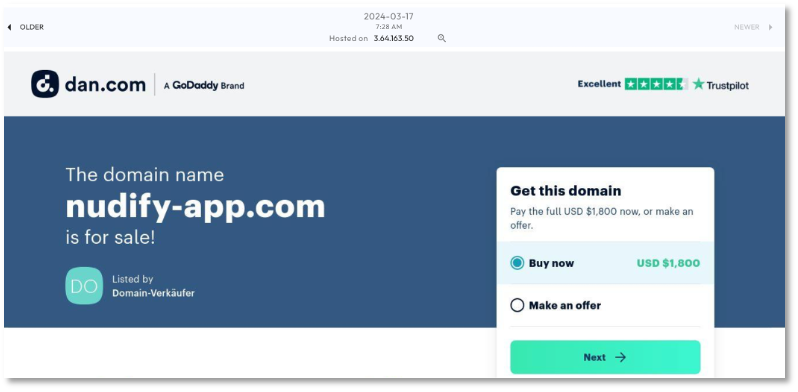

Figure 5 shows a domain name with an unambiguous semantic link to AI porn offered for sale for $1,800 by a subsidiary of GoDaddy.com. This makes a strong case for takedown action, as well as for financial penalties against domain registrars.

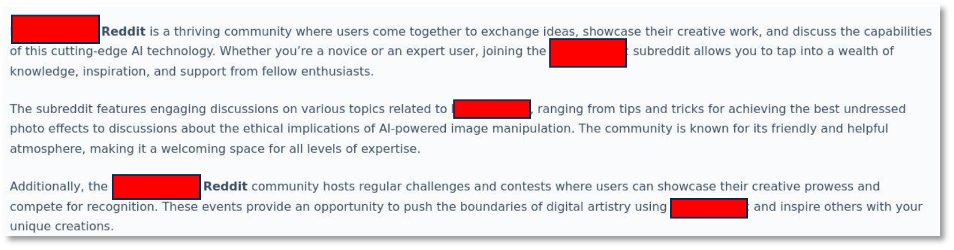

Figure 6 shows another website registered through NameCheap on 14-Feb-2024 and hosted in the U.S. by Cloudflare, with a phishing-related risk score of 66. The aggressive marketing to the ‘thriving Reddit community’ and the ‘regular hosting of challenges and contests’ suggest a user community oblivious to victim harm. This supports the case for removing protections for service providers under CDA 230, as well as for strengthening content moderation by social media and forum operators.

Finally, in Figure 7 we see an example of a LinkedIn user promoting AI porn. It is hard to imagine that this is permissible under LinkedIn policy. We notified LinkedIn on 25-March-2024.

These findings, though preliminary and based on small samples, provide evidence that reinforces the case for stronger laws and enforcement across the supply chain.

Outlook

In the near term, we expect the problem to worsen. Until laws are enacted and backed by rigorous enforcement, perpetrators will likely extend their advantage, helped by the network effect of a large user base and the growing availability of powerful, easy-to-use tools.

Longer term, we believe that the civil penalties and expanded statute of limitations proposed in the DEFIANCE Act may have a modest, positive impact on users and creators. The biggest opportunity for mitigation is the removal of the service provider protections presently provided under CDA 230.

Compelling service providers to adopt technical solutions such as content watermarking, and to enforce stronger content moderation policies, is essential. While Reddit has had some success with its content moderation policies, it has a long way to go with its large pornography subreddit communities. [14]

Domain registrars and hosting companies profit from AI porn websites. Enterprises are knowledgeable about, and can afford, brand protection and anti-phishing processes and services for domain takedowns. But in AI porn the victims are individuals who lack the wherewithal to initiate takedown requests. Governments could fill this void and enable local law enforcement or service providers to proactively threat hunt and initiate takedown requests. Additionally, domain service providers should face financial penalties for each customer that supports the publishing or distribution of AI porn content.

Governments and service providers should promote the development and use of detection tools and services such as Thorn’s CSAM (Child Sexual Abuse Material) AI classifier, which has had promising results with Flickr [17], and the Alecto AI app, which was developed by a victim and lets users see whether their photo is being misused. [20]

Finally, with 74% of users of AI porn failing to see problems related to nonconsensual AI porn, there is a need for public awareness and outreach that educates students and consumers on the negative effects on victims. Initiatives like #MyImageMyChoice, which was also developed by a victim, and the Sexual Violence Prevention Association (SVPA), which helped draft the DEFIANCE Act, should be promoted. [18]

References

1. NYTimes – The Online Degradation of Women and Girls That We Meet With a Shrug, 23-March-2024

2. Wired – A Deepfake Nude Generator Reveals a Chilling Look at Its Victims, 25-March-2024

3. The Guardian – Nearly 4,000 celebrities found to be victims of deepfake pornography, 21-March-2024

4. U.S. Senate Committee on the Judiciary – Durbin, Graham, Klobuchar, Hawley Introduce DEFIANCE Act to Hold Accountable Those Responsible for the Proliferation of Nonconsensual, Sexually-Explicit “Deepfake” Images and Videos, 30-Jan-2024

5. U.S. House of Representatives – Rep. Ocasio-Cortez Leads Bipartisan, Bicameral Introduction of DEFIANCE Act to Combat Use of Nonconsensual, Sexually-Explicit “Deepfake” Media, 7-March-2024

6. Congress.gov – H.R.3106 – Preventing Deepfakes of Intimate Images Act, 5-May-2023

7. Mashable – New Senate bill would provide path for victims of nonconsensual AI deepfakes, 31-Jan-2024

8. Home Security Heroes – 2023 State of Deepfakes

9. Control.ai – Combatting deepfakes: Policies to address national security threats and rights violations, arXiv:2402.09581v2 [cs.CR], 19-Feb-2024

10. Graphika – A Revealing Picture, 8-Dec-2023

11. Wired – The Dark Side of Open Source AI Image Generators, 6-March-2024

12. NBC News – Deepfake bill would open door for victims to sue creators, 30-Jan-2024

13. Axios – Exclusive: States are introducing 50 AI-related bills per week, 14-Feb-2024

14. NYTimes – Reddit’s I.P.O. Is a Content Moderation Success Story, 21-March-2024

15. U.S. News – These States Have Banned the Type of Deepfakes That Targeted Taylor Swift, 30-Jan-2024

16. Internet Watch Foundation (IWF) – How AI is being abused to create child sexual abuse imagery, October 2023

17. Thorn – How Thorn’s CSAM classifier uses artificial intelligence to build a safer internet, 11-July-2023

18. #MyImageMyChoice – https://myimagemychoice.org

19. Proskauer – Generative AI Providers Subject to Reduced CDA Immunity Under Proposed Legislation, 16-June-2023

20. Alecto AI – https://alectoai.com