Like the weather, headlines and buzz change quickly in the GenAI world. Consider:

- May 13 – OpenAI’s GPT-4o is announced to great acclaim. [1]

- May 14 – Google launches its ‘AI Overviews’ feature as a default setting in the U.S. version of its flagship Search product at its I/O conference. [2]

- May 14 – Our DomainTools monitors for risky new domain registrations associated with OpenAI spike to nearly three times the baseline volume.

By May 23, OpenAI was fending off a storm of negative publicity highlighted by the ‘ScarJo’ incident, while Google was being ridiculed in the press and on social media for AI Overviews that hallucinated bizarre and even dangerous recommendations. On a more serious note, the Stanford HAI (Human-Centered AI) Institute reported a 17% hallucination error rate in a study of GenAI use for legal research.

Bottom line: PR disasters involving market leaders make for juicy headlines. When they are severe enough, they can disrupt business practices and markets and trigger legal action. While the ethical and accuracy issues behind the PR fiascos are serious, my sense is that both OpenAI and Google will simply ride out the storm. As this is a blog about cybersecurity, we’re most interested in the potential threats from new AI-related domain registrations. New domain registrations are often a prelude to malicious activity, particularly phishing and spam. Based on our DomainTools research findings, cyber defenders and consumers should treat new domains that reference OpenAI-related terms as potential threats.

PR Disasters

By May 23, OpenAI was battling three major issues. The biggest was its alleged unauthorized appropriation of Scarlett Johansson’s (ScarJo) voice in its product demo. The second involved high-profile resignations and the disbandment of its ‘Superalignment team’, which was responsible for model safety. The third, reported in MIT Technology Review, was that the data used to train its tokenizer, which helps the model parse and process text, was polluted by Chinese spam websites. For details, see References [3-9].

While OpenAI’s issues were about corporate and executive trust, Google’s issues were about product trust and wild hallucinations. Google found itself the butt of jokes by journalists and users on social media. [10-13] Some of these problems were attributed to the questionable quality of sources such as Reddit used by its LLMs, and to the models’ inability to recognize and handle satire.

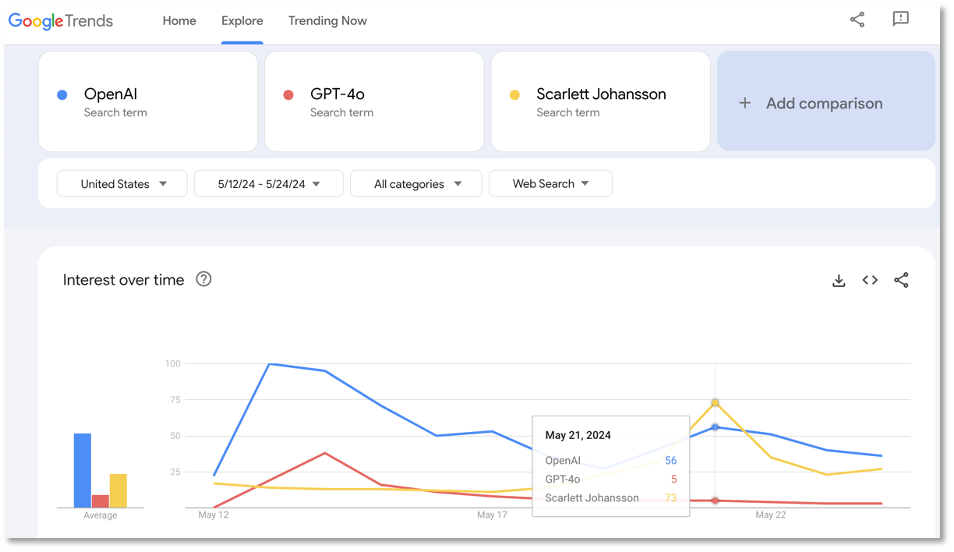

For a quantitative perspective, Figure 1 shows Google Trends data for the terms ‘OpenAI’, ‘GPT-4o’, ‘Scarlett Johansson’, and ‘Google AI Overview’ from May 12 to May 24, 2024. Interest in ‘OpenAI’ and ‘GPT-4o’ surged on May 13-14. The term ‘Scarlett Johansson’ peaked on May 21, significantly outpacing the others. By May 23, ‘Google AI Overview’ was trending upward, surpassing ‘GPT-4o’.

Newly Registered Domains

Newly registered domains (NRDs) are a well-known precursor to phishing and other cyber threats. Attackers often use domain names that convey a lexical association with popular brands and trends to deceive users. In a recent review of AI-enabled cybercrime by MIT Technology Review, phishing tops the list of adversarial AI uses, which also includes content generation, malicious script generation, and language translation. [17] Earlier this year, both OpenAI and Microsoft reported the use of AI-enabled phishing campaigns by nation-state actors including Charcoal Typhoon, Salmon Typhoon, Crimson Sandstorm, Emerald Sleet, and Forest Blizzard. [18, 19]

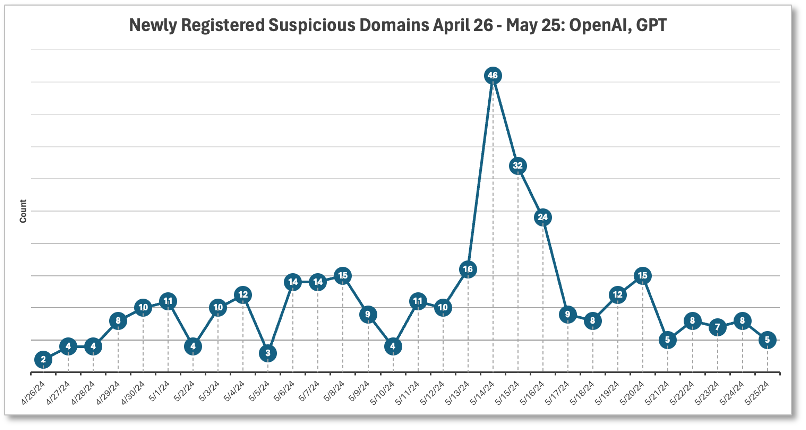

When I noticed that Google search results were promoting many domains from unknown sources for GPT-4o downloads, it seemed like a good time to update our DomainTools NRD filters. We adjusted the filters to capture OpenAI’s new product names, searching for domains that were registered within the last 30 days (April 26 to May 25, 2024), had high risk scores (95 or above), and contained terms such as OpenAI, ChatGPT, GPT-4, or GPT-4o. This query returned 340 high-risk domain registrations.
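The filter logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the DomainTools Iris query itself: the record fields (`domain`, `first_seen`, `risk_score`), the function name, and the two `example-…` rows are hypothetical. Only the date window, the risk threshold, and the search terms come from the text.

```python
from datetime import date

# Parameters taken from the text above.
WINDOW_START = date(2024, 4, 26)
WINDOW_END = date(2024, 5, 25)
MIN_RISK_SCORE = 95
# "gpt-4" also matches "gpt-4o"; both are listed to mirror the text.
BRAND_TERMS = ("openai", "chatgpt", "gpt-4", "gpt-4o")


def is_risky_ai_nrd(record: dict) -> bool:
    """Return True if a record passes the window, risk, and term filters."""
    name = record["domain"].lower()
    in_window = WINDOW_START <= record["first_seen"] <= WINDOW_END
    high_risk = record["risk_score"] >= MIN_RISK_SCORE
    mentions_brand = any(term in name for term in BRAND_TERMS)
    return in_window and high_risk and mentions_brand


# Sample records. The field names are assumptions, and the two
# "example-..." rows are invented to show records that get filtered out.
SAMPLE_RECORDS = [
    {"domain": "chatgpt-download[.]com", "first_seen": date(2024, 5, 14), "risk_score": 97},
    {"domain": "111chatgpt[.]cn", "first_seen": date(2024, 5, 21), "risk_score": 96},
    {"domain": "example-gpt-4o[.]test", "first_seen": date(2024, 3, 1), "risk_score": 99},
    {"domain": "example-openai[.]test", "first_seen": date(2024, 5, 15), "risk_score": 60},
]

matches = [r["domain"] for r in SAMPLE_RECORDS if is_risky_ai_nrd(r)]
print(matches)
```

Plain substring matching is deliberately broad. It will also flag legitimate domains that happen to contain a brand term, which is why the risk-score threshold is part of the filter.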

Figure 2 represents the distribution of these domains over the 30-day period, showing a baseline of 2 to 15 daily registrations with a significant spike during the GPT-4o announcement (May 13-16), when 31% (104) of the domains were registered.
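As a quick sanity check on these figures, the arithmetic can be worked through as follows. The counts come from the text. Treating the average of the non-spike days as the baseline is our assumption for this sketch, since the text gives the baseline only as a range of 2 to 15 registrations per day.

```python
TOTAL_DOMAINS = 340   # high-risk registrations returned by the query
WINDOW_DAYS = 30      # April 26 to May 25, 2024
SPIKE_DOMAINS = 104   # registered May 13-16
SPIKE_DAYS = 4        # May 13, 14, 15, 16

# Share of all registrations that fell within the spike window.
spike_share = SPIKE_DOMAINS / TOTAL_DOMAINS

# Average daily registrations during and outside the spike.
spike_daily_avg = SPIKE_DOMAINS / SPIKE_DAYS
baseline_daily_avg = (TOTAL_DOMAINS - SPIKE_DOMAINS) / (WINDOW_DAYS - SPIKE_DAYS)

# Ratio of spike-day volume to the assumed baseline.
spike_ratio = spike_daily_avg / baseline_daily_avg

print(f"spike share:        {spike_share:.0%}")
print(f"spike daily avg:    {spike_daily_avg:.1f}")
print(f"baseline daily avg: {baseline_daily_avg:.1f}")
print(f"spike / baseline:   {spike_ratio:.2f}x")
```

Under that assumption, the spike days average 26 registrations against roughly 9 on the other days, which is in line with the "nearly three times the baseline" figure cited at the start of this post.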

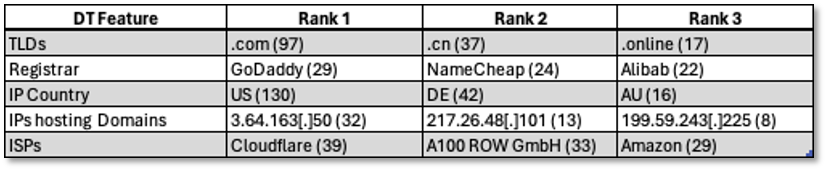

Table 1 summarizes the domain features that we derived from the DomainTools data based on human analyst review. Drilling down on the TLD (Top-Level Domain) feature surfaced more insights. For example, about 15% of the registered domains used TLDs of countries viewed as U.S. adversaries: China (CN), Russia (RU), and Iran (IR).
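A TLD breakdown like this is easy to reproduce from any list of domain names. The sketch below is a minimal example, not the analysis behind Table 1: the function names and the `example-…` domains are hypothetical. Note also that a country-code TLD is only a proxy for origin, since it identifies the registry rather than the registrant’s location.

```python
from collections import Counter

# Country-code TLDs called out in the text above.
ADVERSARY_CCTLDS = {"cn", "ru", "ir"}


def tld_of(domain: str) -> str:
    """Return the last label of a domain, accepting defanged input."""
    cleaned = domain.lower().replace("[.]", ".").rstrip(".")
    return cleaned.rsplit(".", 1)[-1]


def adversary_share(domains: list[str]) -> tuple[Counter, float]:
    """Count domains per TLD and return the share in ADVERSARY_CCTLDS."""
    counts = Counter(tld_of(d) for d in domains)
    total = sum(counts.values())
    flagged = sum(n for tld, n in counts.items() if tld in ADVERSARY_CCTLDS)
    return counts, (flagged / total if total else 0.0)


# The first two names appear in this post; the rest are invented examples.
SAMPLE_DOMAINS = [
    "chatgpt-download[.]com",
    "111chatgpt[.]cn",
    "example-gpt[.]ru",
    "example-openai[.]com",
]

counts, share = adversary_share(SAMPLE_DOMAINS)
print(counts.most_common())
print(f"{share:.0%} of sample domains use CN, RU, or IR TLDs")
```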

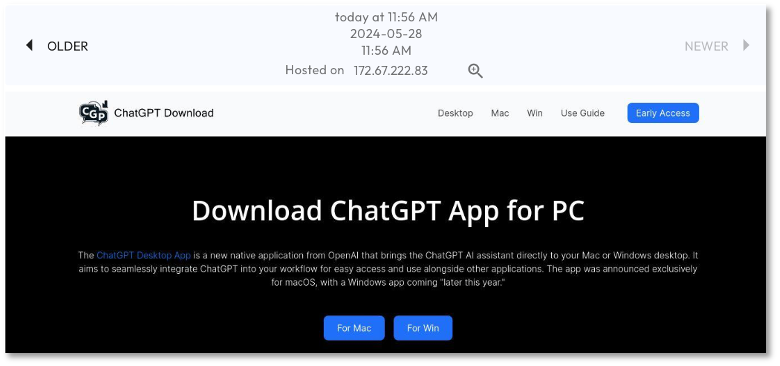

In addition to providing data to support NRD analysis, the DomainTools Iris platform also provides a user interface for analyst exploration and an API to support automation and integration with other security tools. For example, Figure 3 shows a screenshot of a suspicious download website. Key facts about chatgpt-download[.]com: first seen on 14-May-2024, rated with a high risk score of 97, classified as a malware site, registered through the registrar NameSilo, and hosted on IP address 172.67.222.83 by Cloudflare in the U.S.
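Suspicious domains in this post are written in defanged form (for example, `[.]` in place of `.`) so that they cannot be clicked by accident. When moving indicators between a report and an automated tool, analysts typically need to convert in both directions. The helpers below are a small sketch of that step. The function names are our own and are not part of any DomainTools API.

```python
def refang(indicator: str) -> str:
    """Convert a defanged indicator back to its usable form."""
    return indicator.replace("[.]", ".")


def defang(indicator: str) -> str:
    """Make an indicator safe to paste into reports and chat tools.

    Refanging first keeps the function idempotent: input that is
    already defanged is returned unchanged.
    """
    return refang(indicator).replace(".", "[.]")


print(refang("chatgpt-download[.]com"))
print(defang("chatgpt-download.com"))
print(defang("172.67.222.83"))
```

Keeping indicators defanged everywhere except at the point of lookup reduces the chance that someone accidentally visits a malicious site while reading a report.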

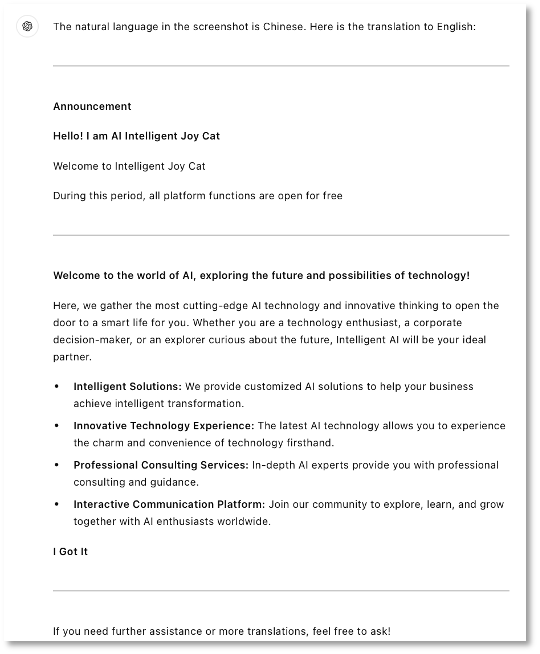

In Figures 4 and 5, we see examples of how analysts can use GPT-4o to provide translation assistance for investigations of domains and networks associated with adversary nations. The list below provides the domain and key features for 111chatgpt[.]cn:

- First seen: 21-May-2024

- DomainTools Risk Score: 96

- DomainTools Risk Score Threat Profile: Malware

- Registrar: 宁波万鼎电子商务有限公司

- ISP: Aliyun Computing Co. Ltd

- IP address: 47.98.209.57

- Host IP Country: CN

With this information, the analyst can use GPT-4o to translate the screenshot from DomainTools as shown by the Prompt in Figure 4 and the translation in Figure 5.

Outlook

The events we’ve described should spur OpenAI, Google, and other leading GenAI LLM and solution providers to improve their data quality and models. But despite the gravity of the issues, we believe they will not significantly alter these companies’ behavior or impede GenAI adoption, employment, or investment. As Thomas Monteiro, a Google analyst at Investing.com, aptly and perhaps recklessly notes: “Companies need to move really fast, even if that includes skipping a few steps along the way. The user experience will just have to catch up.” [10] We’ve heard that before.

The Stanford HAI (Human-Centered AI) Institute recently published alarming findings on GenAI’s use in legal research, revealing a hallucination error rate of 17% (roughly 1 out of 6 results is wrong) even with advanced measures like RAG (Retrieval-Augmented Generation) in place. This should be taken as a wake-up call for a more aggressive approach to mitigating inherent hallucination errors through better data quality for LLMs, transparent benchmark testing, regulation, and even litigation. [16]

Recent OpenAI and Google licensing deals with major publishers (News Corp, Associated Press, Politico, BuzzFeed, Axel Springer, the Financial Times) signal an encouraging shift towards addressing data and model quality issues. The outcome of litigation against OpenAI and Microsoft by publishers like The New York Times and Alden Global Capital, and by authors like John Grisham, George R.R. Martin, and Jodi Picoult, may establish precedents for the responsibilities of AI developers and the rights of content creators. [14, 15] This dynamic business and legal climate suggests that while the technology accelerates, the frameworks around it (ethical, legal, and quality) struggle to keep pace.

Editor’s Notes:

- Credit to ChatGPT for valuable brainstorming, translation and proofreading assistance.

- Featured Image credit: The ONION, 23-May-2024: https://www.theonion.com/jerky-7-fingered-scarlett-johansson-appears-in-video-t-1851496932

References

1. OpenAI.com – Introducing GPT-4o and more tools to ChatGPT free users, 13-May-2024

2. TechCrunch – The top AI announcements from Google I/O, 15-May-2024

3. Fortune – OpenAI’s week of chaos has reopened a festering wound at the $80 billion startup that was supposed to have healed, 23-May-2024

4. Inc.com – Sam Altman Just Made the 1 Mistake No CEO Should Ever Make, 23-May-2024

5. MIT Technology Review – OpenAI’s latest blunder shows the challenges facing Chinese AI models, 22-May-2024

6. Mashable – What OpenAI’s Scarlett Johansson drama tells us about the future of AI, 25-May-2024

7. The ONION – Jerky, 7-Fingered Scarlett Johansson Appears In Video To Express Full-Fledged Approval Of OpenAI, 23-May-2024

8. BGR – 3 big ChatGPT developments that have made me lose trust in OpenAI, 25-May-2024

9. NYTimes – ScarJo vs. ChatGPT, Neuralink’s First Patient Opens Up, and Microsoft’s A.I. PCs, 24-May-2024

10. NYTimes – Google’s A.I. Search Errors Cause a Furor Online, 24-May-2024

11. The Verge – Google promised a better search experience — now it’s telling us to put glue on our pizza, 23-May-2024

12. VentureBeat – ‘Legitimately dangerous’: Google’s erroneous AI Overviews spark mockery, concern, 24-May-2024

13. Futurism – Google’s AI Is Churning Out a Deluge of Completely Inaccurate, Totally Confident Garbage, 25-May-2024

14. Semafor – AI companies freeze out partisan media, 26-May-2024

15. TechCrunch – This Week in AI: OpenAI and publishers are partners of convenience, 25-May-2024

16. Stanford HAI (Human-Centered Artificial Intelligence) – AI on Trial: Legal Models Hallucinate in 1 out of 6 Queries, 23-May-2024

17. MIT Technology Review – Five ways criminals are using AI, 21-May-2024

18. Microsoft Security – Staying ahead of threat actors in the age of AI, 14-Feb-2024

19. OpenAI – Disrupting malicious uses of AI by state-affiliated threat actors, 14-Feb-2024