In the wake of two catastrophic hurricanes and just two weeks before highly divisive elections, the U.S. faces unprecedented challenges. Millions have lost loved ones, homes, and livelihoods. Damage estimates range from $20 billion to over $250 billion. Relief efforts are being hindered by disinformation, conspiracy theories, and threats of violence targeting first responders, government officials, and aid workers. Disinformation from both foreign and domestic sources floods the internet, further destabilizing the situation. Amid this turmoil, a silent majority may be holding on, hoping that stability will prevail.

In this report, we examine the role of Generative AI in influence operations and cybersecurity, and we apply the ‘Breakout Scale’ to:

- Measure the impact of influence operations (IO)

- Assess domestic cases of influence operations

- Examine the role of foreign and domestic IO in the U.S. elections

- Defend against future threats

Bottom line: Many disinformation campaigns, whether foreign or domestic, are fueled by conspiracy theories and amplified by AI and social media. Some domestic cases have escalated to the highest levels on the ‘Breakout Scale,’ including Category Six: Advocacy of Political Violence and Category Five: Celebrity Endorsement. These conspiracies are promoted by U.S. politicians, PACs (Political Action Committees), social media influencers, media outlets, and tech platforms, all of whom profit from the chaos. Both the Kremlin and U.S. political campaigns appear to follow similar tactics, overwhelming fact-checkers, defenders, and the public by ‘flooding the zone with misinformation.’ To counter these AI-enabled threats, we need AI-enabled defenses, stronger fact-checking mechanisms, responsible journalism, public advocacy and education, and effective governance.

Breakout Scale

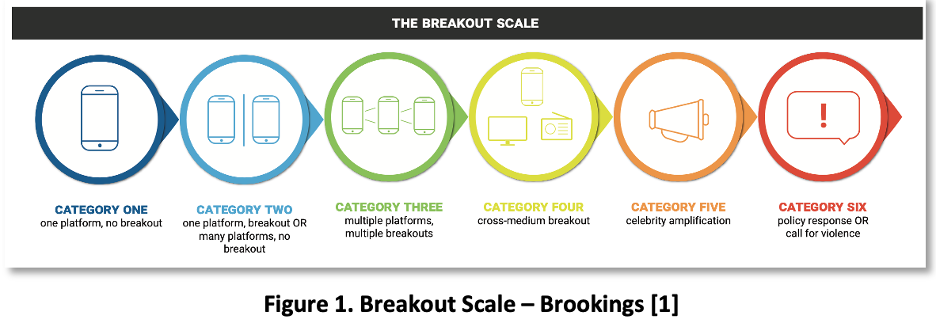

The Breakout Scale is a model used to categorize the impact of influence operations (IO) based on their spread and severity. Originally developed by Ben Nimmo in 2020 for Brookings, it was recently highlighted in a report by OpenAI, where Nimmo now works as a threat intelligence investigator [2-4]. As shown in Figure 1, the scale defines six categories, ranging from Category One (IO confined to a single platform) to the most severe, Category Six, where disinformation is amplified to the point of prompting a policy response or calls for violence.

The highest-impact categories, Category Five and Category Six, represent significant escalations. In Category Five, disinformation spreads to multiple outlets, including mainstream media, and gains amplification from celebrities or politicians. Category Six is reserved for operations that result in a policy response or calls for violence, indicating the most serious consequences of influence operations.
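The category logic lends itself to a simple decision function in which the highest matching category wins. The sketch below is a hypothetical illustration, not Nimmo's or OpenAI's tooling: the signal names are invented for this example, and the criteria for Categories Two through Four, which this report does not spell out, are paraphrased from Nimmo's 2020 Brookings paper [2].

```python
from dataclasses import dataclass
from enum import IntEnum


class BreakoutCategory(IntEnum):
    ONE = 1    # confined to one platform, no breakout
    TWO = 2    # one platform with breakout, or several platforms without breakout
    THREE = 3  # several platforms with breakout on more than one of them
    FOUR = 4   # cross-medium breakout, e.g. pickup by mainstream media
    FIVE = 5   # amplified by celebrities or politicians
    SIX = 6    # triggers a policy response or a call for violence


@dataclass
class IOSignals:
    """Hypothetical analyst-supplied observations about one operation."""
    platforms: int                 # platforms where the content appeared
    platforms_with_breakout: int   # platforms where it reached beyond its own community
    mainstream_media_pickup: bool = False
    celebrity_amplification: bool = False
    policy_response_or_violence: bool = False


def classify(s: IOSignals) -> BreakoutCategory:
    # Check the most severe criteria first so the highest matching category wins.
    if s.policy_response_or_violence:
        return BreakoutCategory.SIX
    if s.celebrity_amplification:
        return BreakoutCategory.FIVE
    if s.mainstream_media_pickup:
        return BreakoutCategory.FOUR
    if s.platforms > 1 and s.platforms_with_breakout > 1:
        return BreakoutCategory.THREE
    if s.platforms > 1 or s.platforms_with_breakout >= 1:
        return BreakoutCategory.TWO
    return BreakoutCategory.ONE
```

Checking from most to least severe means that once a narrative meets the Category Six criteria, lower-level signals no longer change its rating, which matches how the hurricane conspiracies are rated later in this report.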

On October 9, OpenAI published an “Influence and Cyber Operations” report, co-authored by Ben Nimmo and Michael Flossman [4]. The 54-page report detailed more than 20 influence operations that leveraged ChatGPT and were disrupted by OpenAI, some of which had previously been reported by Meta and Microsoft. The report identified foreign influence operations by Russian, Iranian, and Chinese actors, including efforts targeting the U.S. elections. These election-related operations were rated low impact (Breakout Scale – Category Two), and none of the campaigns in the report was rated higher than Category Four.

Domestic Disinformation

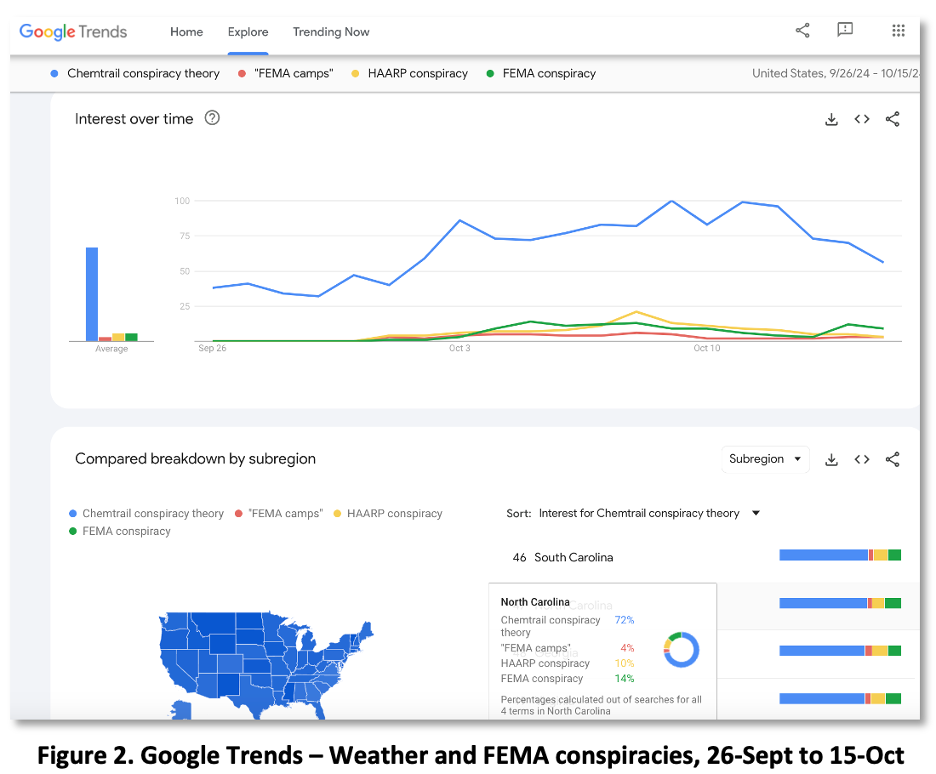

The flood of conspiracy theories sparked by Hurricanes Helene and Milton offers an opportunity to study the breakout impact of influence operations [6-9]. As part of our research planning, we used OpenAI’s GPT-4o to generate a list of related terms and phrases. We focused on two topics: weather manipulation and FEMA. For weather manipulation, we selected “Chemtrails conspiracy” and “HAARP conspiracy” (HAARP is the High-frequency Active Auroral Research Program). For FEMA, we analyzed “FEMA camps” and “FEMA conspiracy.”

Figure 2 shows the results of our Google Trends analysis of these conspiracy topics from September 26 to October 15, the period of the hurricanes. The trend-line graph shows that interest in the “Chemtrails conspiracy” far exceeded interest in the other terms, followed by the “FEMA conspiracy.” The bottom portion of the figure breaks down interest by subregion, highlighting significant search activity on these topics in North Carolina, a swing state. Based on the Google Trends data and extensive media reporting, we concluded that these conspiracy narratives reached at least Breakout Scale – Category Five, indicating amplification by prominent figures and widespread media coverage.

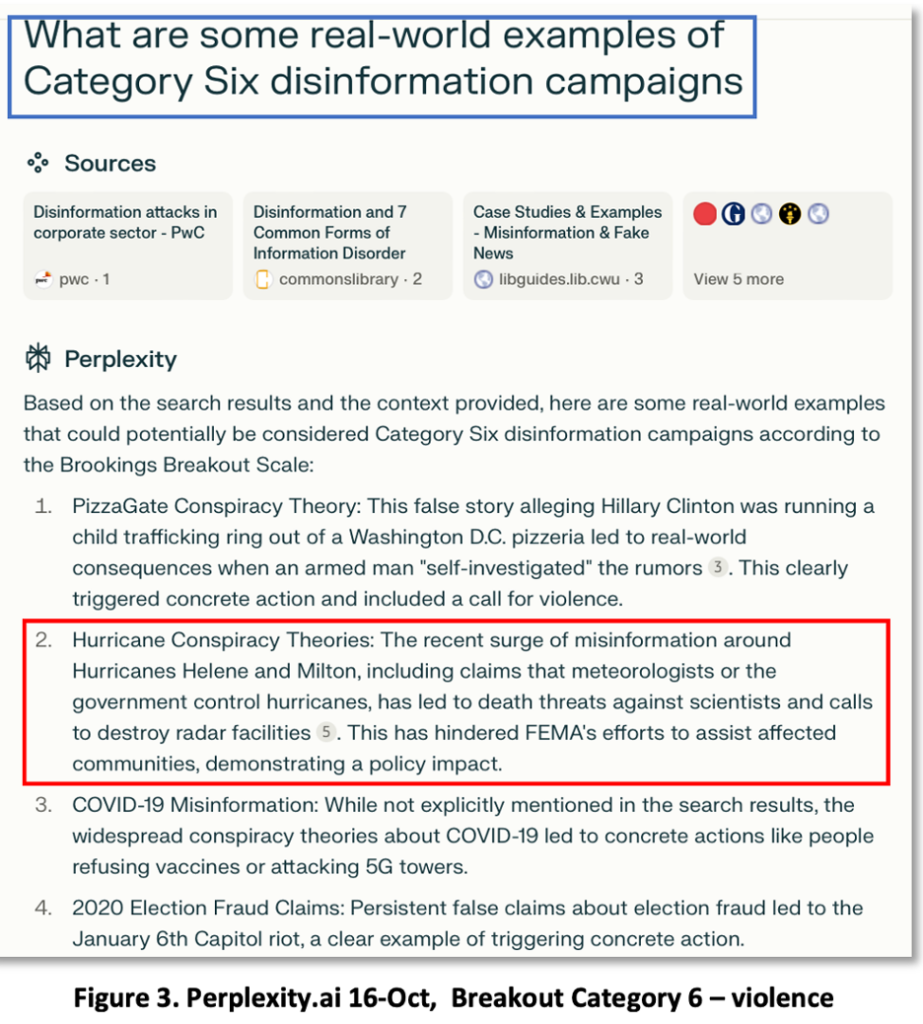

The next step in our research was to identify instances of Breakout Scale – Category Six, which covers calls for violence. To assist with this, we enlisted our chatbots as research tools. As shown in Figure 3, a response from Perplexity.ai, both the hurricane-related and FEMA-related conspiracies escalated to Category Six, with reports of death threats against meteorologists, FEMA workers, and first responders. These conspiracies were amplified by political figures and fueled concrete actions such as threats of violence, meeting the criteria for Category Six. Perplexity.ai provides additional details, which are corroborated by sources [6-9].

Influence Ops on Elections

Foreign Influence Operations: In an Election Security Update issued 45 days before the U.S. elections, the Director of National Intelligence (DNI), representing the U.S. Intelligence Community (IC), reported that Russia, Iran, and China are all using Generative AI and manipulated media to amplify their influence operations [10]. Each country is developing sophisticated models to create precision-targeted content on divisive issues, tailored to specific audiences. Russia has been the most advanced, promoting the Republican Party, while Iran supports the Democrats. Both countries target Spanish-speaking audiences through established media outlets, fake media, and social media channels.

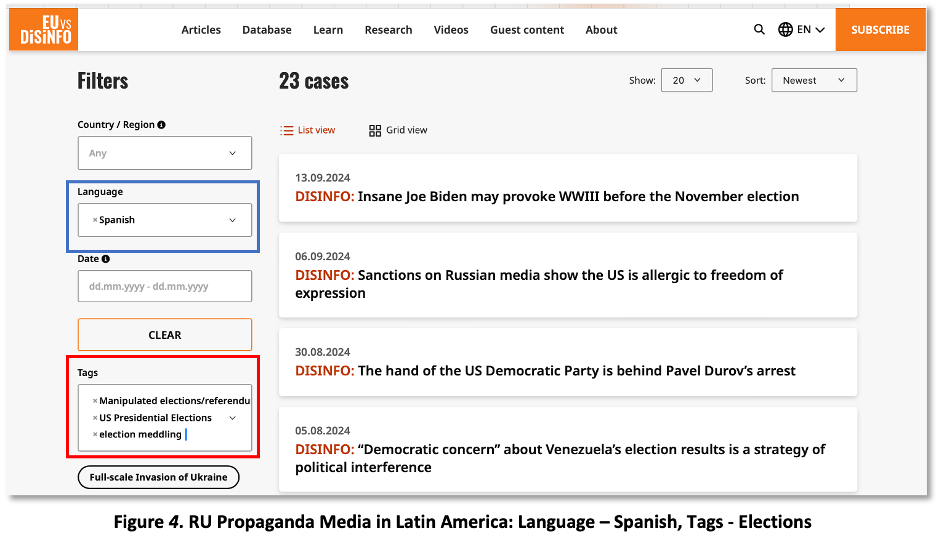

EUvsDiSiNFO, part of the EU’s East Stratcom Task Force, has operated an open-source database of Russian disinformation campaigns since 2015. The database now contains nearly 18,000 entries, each tagged with structured data on subjects, target countries, languages, media outlets, and dates. This structured data is a valuable resource for analysts and could be used to train machine learning models. Figure 4 provides an example of a database search for disinformation written in Spanish and tagged for election-related content.
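Because each entry carries structured tags, a search like the one in Figure 4 amounts to filtering records on those fields. The following is a minimal sketch that assumes a hypothetical record layout: the `DisinfoCase` fields, the `search` helper, and the placeholder entries are invented for illustration and do not reflect EUvsDiSiNFO's actual export format or any official API.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class DisinfoCase:
    """Hypothetical record shape; real EUvsDiSiNFO exports may differ."""
    title: str
    published: date
    language: str
    target_countries: List[str] = field(default_factory=list)
    outlets: List[str] = field(default_factory=list)
    keywords: List[str] = field(default_factory=list)


def search(cases: List[DisinfoCase], language: Optional[str] = None,
           keyword: Optional[str] = None, since: Optional[date] = None) -> List[DisinfoCase]:
    """Return the cases that match every filter that is not None."""
    results = []
    for case in cases:
        if language is not None and case.language != language:
            continue
        # Compare keywords case-insensitively so "elections" matches "Elections".
        if keyword is not None and keyword.lower() not in (k.lower() for k in case.keywords):
            continue
        if since is not None and case.published < since:
            continue
        results.append(case)
    return results


# Placeholder records for illustration only (not real database entries).
demo = [
    DisinfoCase("Placeholder A", date(2024, 9, 1), "Spanish", keywords=["Elections"]),
    DisinfoCase("Placeholder B", date(2024, 9, 2), "English", keywords=["Elections"]),
]
print([c.title for c in search(demo, language="Spanish", keyword="elections")])
# prints ['Placeholder A']
```

Keeping the filters optional lets the same helper answer narrower or broader questions (for example, all Spanish-language entries since a given date) without rewriting the query.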

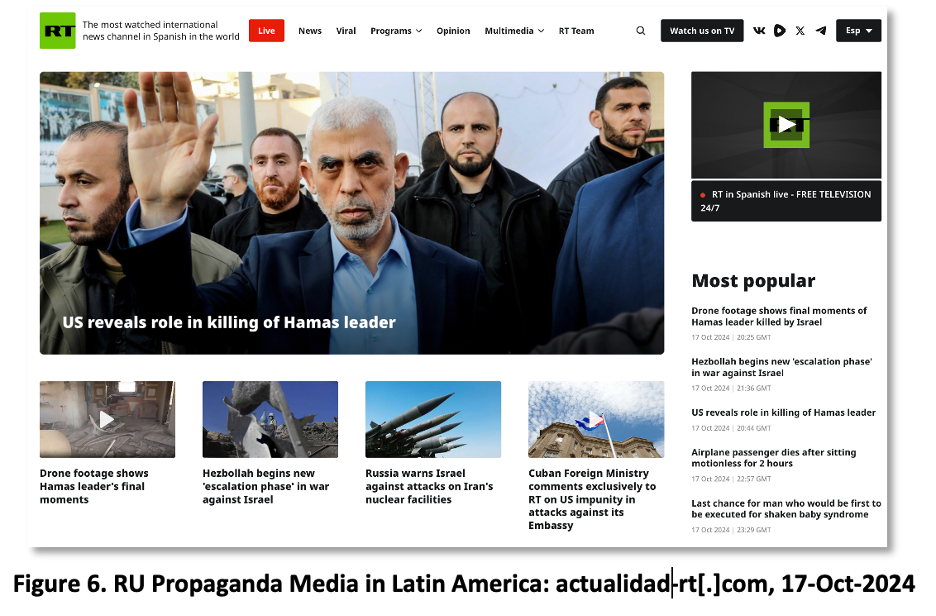

Russia, much like the U.S. during the Cold War with its Voice of America (VOA) and Radio Free Europe initiatives, has strategically invested in the Global South (Middle East, Africa, Latin America) to undermine the U.S. with narratives of imperialism and moral decay. Russian media is a dominant force in the Latin American media landscape. Russia Today (RT), one of Russia’s leading media companies, employs 200 Spanish-speaking workers in its Moscow headquarters and operates multiple brands (e.g., RT en Español, Actualidad RT, esrt[.]press), with offices in Caracas, Havana, and Buenos Aires. Today, RT’s Latin American operations are the strongest of its regional outlets, surpassing its English, Arabic, German, and French divisions [12-14].

Russia leverages its media investments in Latin America to precision-target disinformation toward Spanish-speaking communities in the U.S. Latino voting-rights groups have warned of disinformation circulating on platforms like TikTok and WhatsApp, promoting third-party candidates and spreading voter suppression messages (e.g., “November 5 election has been canceled”). False claims targeting Kamala Harris with labels like “communist” and “socialist” are also common [11].

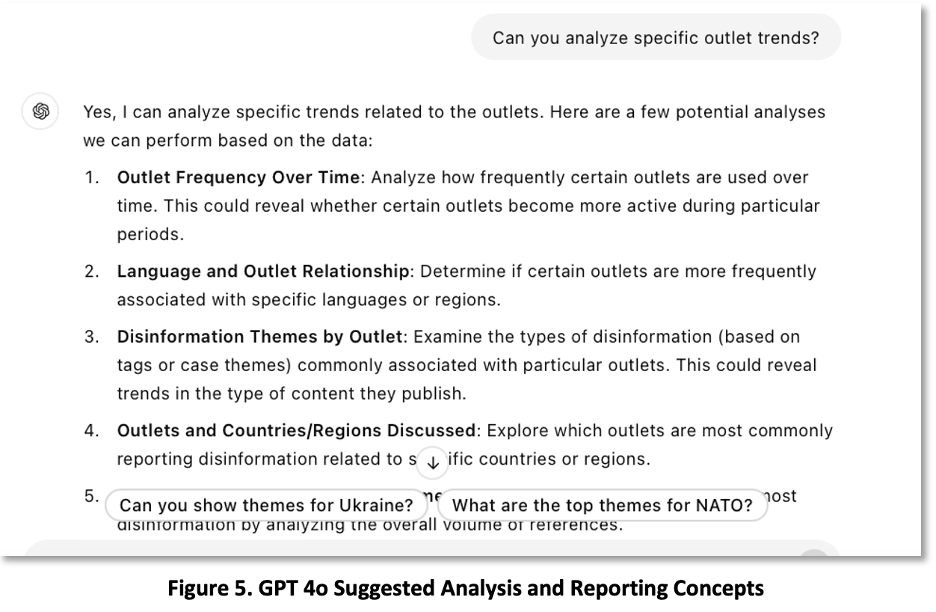

As Russian actors employ GenAI to boost their influence operations, U.S. defenders could likewise use GenAI to strengthen defenses. For instance, to better understand the media outlets Russia uses for its Spanish-language influence campaigns, we uploaded to GPT-4o a CSV file containing tagged data on 30 Spanish-language election campaigns and asked it for an analysis. Figure 5 shows GPT-4o’s recommendations for analyzing disinformation trends to counter these threats.
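A first pass of such an analysis, counting which outlets recur most often, is easy to reproduce deterministically and can serve as a cross-check on a chatbot's summary. A minimal sketch using Python's standard `csv` module, assuming a hypothetical column named `outlets` that holds semicolon-separated outlet names (the layout of the file we uploaded is not described here):

```python
import csv
import io
from collections import Counter


def top_outlets(csv_text: str, column: str = "outlets", sep: str = ";", n: int = 10):
    """Return the n most frequently tagged outlets in a CSV export.

    Assumes (hypothetically) that `column` holds outlet names separated by `sep`.
    """
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Missing or empty cells become "" so they contribute nothing.
        raw = row.get(column) or ""
        # Strip whitespace, drop empty names, and de-duplicate within a row
        # (dict.fromkeys keeps first-seen order) so each outlet counts once per entry.
        outlets = dict.fromkeys(o.strip() for o in raw.split(sep) if o.strip())
        counts.update(list(outlets))
    return counts.most_common(n)
```

To use it on a real export, read the file with `open(path, encoding="utf-8")` and pass its contents; the column name and separator are parameters because the actual layout will vary by source.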

Figure 6 shows an English-translated view of actualidad-rt[.]com, one of RT’s Spanish-language websites. The site displays a clear anti-U.S., anti-Israel, pro-Russia, and pro-Hezbollah/Hamas bias.

Domestic Influence Operations: In the U.S., the Elon Musk-funded Future Coalition PAC is running an influence operation against Kamala Harris, micro-targeting Muslim and Jewish voters in swing states with diametrically opposed messages [15, 16]. As shown in Figure 7, the left panel portrays Harris as a supporter of Israel, targeting Muslim voters in Michigan with Snapchat ads, while the right panel presents Harris as supporting Palestine and Hamas, targeting Jewish voters in Pennsylvania, also via Snapchat.

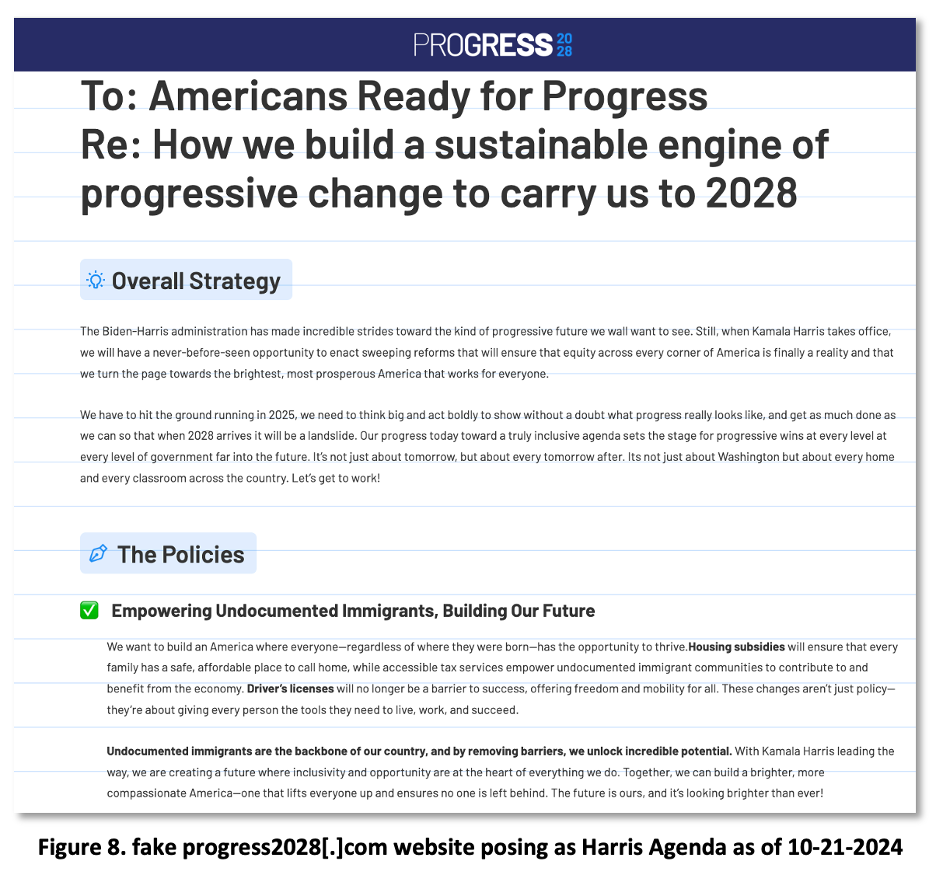

A related PAC, Building America’s Future, also backed by dark money from Musk and others, is micro-targeting Black voters with ads claiming that Kamala Harris is trying to ban menthol cigarettes (an estimated 81% of Black smokers prefer menthols). On September 26, the PAC registered a fake website, progress2028[.]com, which purports to present the Democratic Party’s political manifesto, as seen in Figure 8 [17]. The domain, registered through GoDaddy and hosted by Wpengine Inc. in Austin, Texas, is flagged as suspicious due to its recent registration: it has a DomainTools risk score of 68, and CrowdStrike’s Hybrid Analysis (Falcon Sandbox) returns a threat verdict of ‘suspicious.’
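Recent registration is one of the simplest signals behind such risk ratings. The sketch below computes a domain's age from its registration date and flags it when the age falls under a threshold. The function name and the 30-day threshold are illustrative assumptions; this is not how DomainTools or Hybrid Analysis compute their scores, which combine many more signals.

```python
from datetime import date


def domain_age_flag(registered: date, as_of: date, threshold_days: int = 30):
    """Return (age_in_days, is_recent) for a domain registration date.

    The 30-day default is an illustrative assumption, not a vendor standard.
    """
    if registered > as_of:
        raise ValueError("registration date is after the reference date")
    age = (as_of - registered).days
    return age, age < threshold_days
```

With the registration date reported above and an assumed reference date of October 22, 2024 (about two weeks before the election), `domain_age_flag(date(2024, 9, 26), date(2024, 10, 22))` returns `(26, True)`.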

Outlook

AI-enabled, conspiracy-driven influence operations pose serious threats to national security and global stability [18]. Fortunately, elections have also demonstrated resilience in the face of these challenges. The accelerated adoption of AI-enabled solutions for cybersecurity, disinformation detection, and threat hunting, together with increased sharing of threat intelligence among governments, corporations, non-profits, and researchers, is critical in the fight against these cyber and disinformation threats.

Databases such as EUvsDiSiNFO and the Misinformation Dashboard from the News Literacy Project [19] illustrate the importance of threat sharing. These platforms provide structured data that allows analysts to track and counter disinformation campaigns more effectively.

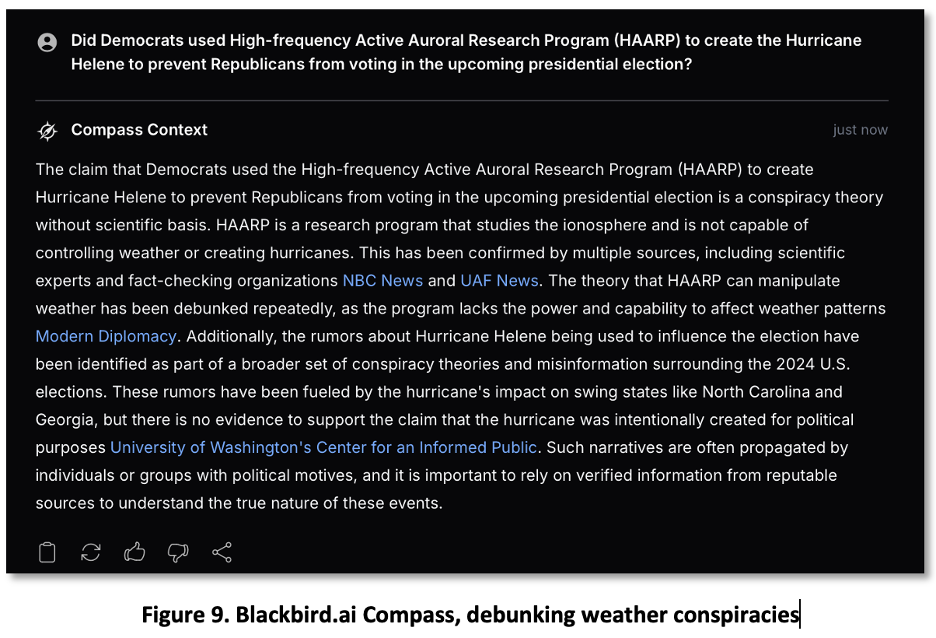

Generative AI language models and solution providers also play a vital role in debunking disinformation. As shown in Figure 9, Blackbird.ai, a provider of narrative intelligence solutions, offers a concise, effective example of how AI can counter conspiracy theories such as the HAARP weather modification myth.

Perhaps most important, strong governance, regulatory frameworks, and incentives are needed to combat the weaponization of information and cyberspace. Clear regulations can help limit the spread of disinformation, while incentives can encourage the development of innovative defensive technologies.

Editor’s Note:

- Credit to our chatbots for their valuable assistance with threat analysis research, proofreading, and editorial review.

References

- CheckFirst – Operation Overload, 6-May-2024

- Brookings, Ben Nimmo – The Breakout Scale: Measuring the impact of influence operations (Full Report), September 2020

- The Washington Post – This threat hunter chases U.S. foes exploiting AI to sway the election, 13-Oct-2024

- OpenAI – Influence and cyber operations: an update, 9-Oct-2024

- Meta – Second Quarter Adversarial Threat Report, August 2024

- Media Matters for America – On TikTok, misinformation about Hurricane Helene has spurred calls for violence against FEMA personnel, 9-Oct-2024

- NYTimes – Meteorologists Face Harassment and Death Threats Amid Hurricane Disinformation, 14-Oct-2024

- NYTimes – Posts falsely blame HAARP research project for Hurricane Helene, 14-Oct-2024

- Grist – Fact-checking the viral conspiracies in the wake of Hurricane Helene, 4-Oct-2024

- DNI (Director of National Intelligence) – Foreign Actors Boosting Influence Operations with Generated and Manipulated Media, Mid-September 2024

- NYTimes – What Keeps Latino Voting-Rights Activists Up at Night? Disinformation, 17-Oct-2024

- Wilson Center – Latin America Loves Russia Today, 18-Jul-2023

- Atlantic Council, DFRLab – In Latin America, Russia’s ambassadors and state media tailor anti-Ukraine content to the local context, 29-Feb-2024

- Recorded Future News – Russian ‘influence-for-hire’ firms spread propaganda in Latin America: US State Department, 8-Nov-2023

- 404 Media – This Is Exactly How an Elon Musk-Funded PAC Is Microtargeting Muslims and Jews With Opposing Messages, 18-Oct-2024

- HuffPost – Elon Musk Helped Fund The Most Cynical Super PAC Of The 2024 Election, 16-Oct-2024

- Opensecrets.org – Pro-Trump dark money network tied to Elon Musk behind fake pro-Harris campaign scheme, 16-Oct-2024

- The Atlantic, Charlie Warzel – I’m Running Out of Ways to Explain How Bad This Is, 10-Oct-2024

- News Literacy Project – Misinformation Dashboard: https://misinfodashboard.newslit.org, 19-Aug-2024

- AFP Fact Check – https://factcheck.afp.com/doc.afp.com.36HN29E, 1-Oct-2024

- NYTimes – Meta and YouTube Crack Down on Russian Media Outlets, 17-Sep-2024

- NewsGuard – 2024 U.S. Election Misinformation Monitoring Center, last updated 15-Oct-2024

- GMF Alliance for Securing Democracy – The Russian Propaganda Nesting Doll: How RT is Layered Into the Digital Information Environment, May 2024