Separating the signal from the noise in the tech echo chamber is not a new challenge. But when Sir Demis Hassabis, DeepMind co-founder and chief executive of Google's AI research arm, highlighted the pervasive hype and grift surrounding GenAI investing, his comments seemed destined to reverberate. They were also reminiscent of the recent investment hype and fraud in crypto, and they served as a call to dig deeper into the subject of AI-related hype and investment scams. [1]

In this post, we share insights from our research into tools for measuring hype, along with examples of two specific grifts related to this hype: the use of ‘AI Washing’ in the investment industry, and the impersonation of leading Generative AI (GenAI) brands by malicious cyber actors. We also share the methods and tools we used: Google Trends, DomainTools, and our GenAI suite, including ChatGPT 4.0, Copilot, Perplexity.ai, and Claude. We conclude with a review and outlook on a broader set of AI-enabled cyber threats and issues.

AI Hype Measurements

Many followers of generative AI have an intuitive skepticism of AI-related investments. When industry leaders like Sir Demis Hassabis voice similar skepticism, it reinforces that intuition. Yet, in keeping with Cybercrank’s commitment to ‘data driven insights’, relying on intuition is not enough. We also need concrete data and real-world examples to support our views.

While there are many tools for measuring sentiment, Google Trends is a cost-free and easy-to-use tool that fits nicely in our research toolkit. [2] In Figure 1, we see comparative results for the last 30 days for four arbitrary terms: ‘AI Hype’ (blue), ‘AI Fraud’ (red), ‘AI Crime’ (yellow), and ‘AI Washing’. Google Trends provides a relative measure of search interest on a scale from 0 to 100, with the highest point of interest within the specified time frame set at 100. As depicted in Figure 1, searches for ‘AI Hype’ began to climb on the 6th of April, matching ‘AI Crime’ at a score of 37 by the 20th of April. Interestingly, this uptick began six days after Sir Demis’ critique appeared in The Financial Times. [1] The timing does not prove causation, but it suggests that expert commentary may influence public discourse about AI.
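To make the 0 to 100 scale concrete, here is a minimal Python sketch of this kind of relative scaling. It is an illustration only: the raw counts are invented, `scale_to_peak` is a hypothetical helper, and we assume that in a multi-term comparison all terms share a single peak. Google's actual pipeline also samples searches and normalizes by total search volume, which this sketch does not attempt.

```python
def scale_to_peak(series_by_term):
    """Rescale raw counts so the largest value across all terms becomes 100."""
    peak = max(max(values) for values in series_by_term.values())
    if peak == 0:
        # No interest at all: every point stays at zero.
        return {term: [0 for _ in values] for term, values in series_by_term.items()}
    return {
        term: [round(100 * value / peak) for value in values]
        for term, values in series_by_term.items()
    }


# Invented daily counts for two search terms (not real Google Trends data).
raw_counts = {
    "AI Hype": [10, 20, 40],
    "AI Crime": [50, 30, 40],
}

scaled = scale_to_peak(raw_counts)
for term, values in scaled.items():
    print(term, values)
```

The point of the sketch is that a score such as 37 says nothing about absolute search volume. It only says that interest stood at 37% of the highest point in the comparison.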

AI Washing Findings

The Financial Times highlighted the massive investment in AI companies, with VCs pouring $42.5B into 2,500 AI start-ups in 2023. Such exuberance can fuel hype-driven market valuations and behaviors, prompting companies and speculators to exaggerate their capabilities, similar to the phenomena of greenwashing in environmental tech and cryptowashing in cryptocurrency. ‘Washing’ in these instances signifies a blend of hype, grift and fraud.

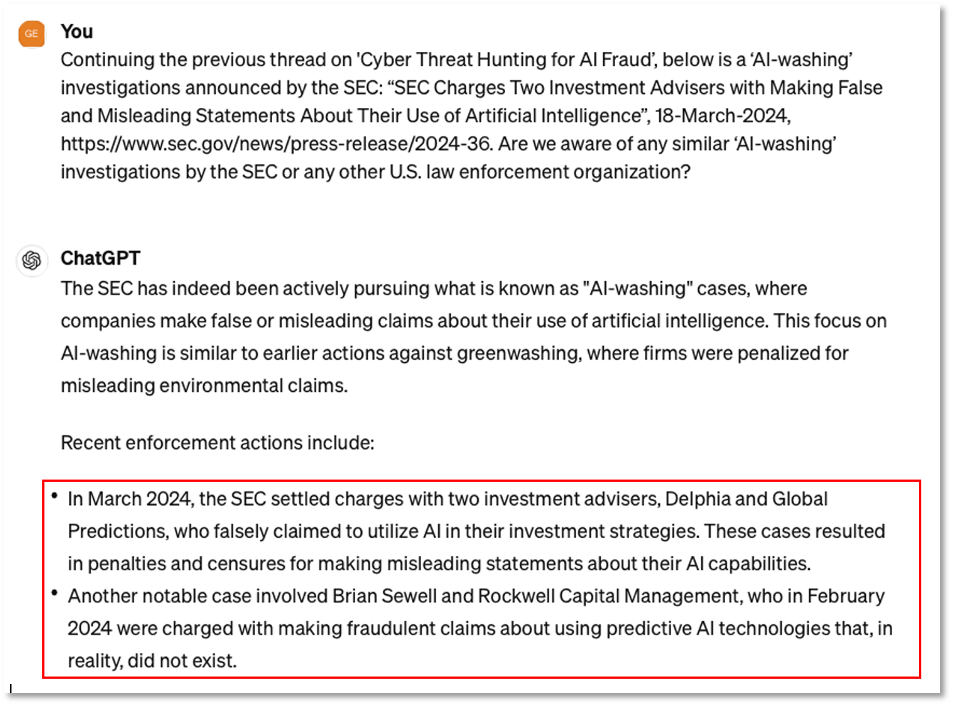

In 2024, we have already seen at least three SEC (Securities and Exchange Commission) enforcement actions for ‘AI Washing’. While we were alerted to the first two cases by our news feeds [3], ChatGPT 4.0 helped us identify an additional case of AI Washing as shown in Figure 2. [4]

AI Grift and Fraud in Investments

The Federal Trade Commission (FTC) provides a wealth of consumer data, including an interactive Tableau-based data explorer for its Consumer Sentinel data. Figure 3 shows a snapshot of this explorer, revealing a marked increase in investment-related fraud reports, which surged to 25,791 in Q4 of 2023. [5] Perhaps in response to this trend, in February 2024 the FTC proposed new protections to combat AI-facilitated impersonation fraud techniques such as deepfakes, spoofed logos and seals, spoofed websites and email addresses, implied affiliation, and deceptive branding. [6]

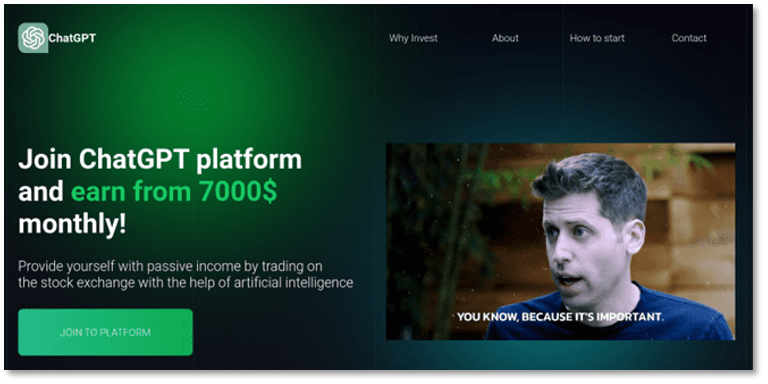

It is not hard to find OSINT (Open Source Intelligence) reports of ChatGPT- and OpenAI-themed investment scams. For example, the Netcraft report provides examples of scams impersonating ChatGPT and OpenAI that feature deepfakes, spoofed logos, and implied affiliation or endorsements. [7] These are precisely the fraudulent methods that the FTC’s new measures seek to combat.

AI Brand Impersonation

Brand impersonation has long been a favored attack method of scammers, fraudsters and phishers. With AI companies dominating the news and social media discussions, the brands, logos and domains of the leading AI tech companies are attractive targets for fraudsters. To get a sense of this activity level, we used the DomainTools Iris platform to research new domain registrations for leading AI brands.

Our initial filter, which selected active AI-themed domains registered in the last year with a risk score of 80 or more, yielded nearly 10,000 high-risk domains. To tailor our data set for this blog, we narrowed the parameters to include only active domains related to ChatGPT and OpenAI, registered in the past 90 days, with risk scores of 95 or above. This filtering resulted in a list of approximately 500 domains. Each domain in this subset either impersonates or suggests an affiliation with OpenAI or ChatGPT and is classified by DomainTools as high-risk.
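The two filtering passes described above can be sketched in a few lines of Python. This is not the DomainTools Iris interface: the `DomainRecord` fields, the `filter_domains` helper, and the sample records (which use the reserved `.example` suffix) are all hypothetical, chosen only to mirror the criteria in the text (active, keyword match, registration age, risk score).

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DomainRecord:
    """Hypothetical record shape; real exports will differ."""
    name: str
    registered: date
    risk_score: int
    active: bool


def filter_domains(records, keywords, min_risk, max_age_days, today):
    """Keep active domains that match a keyword, are recent, and score high."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        record for record in records
        if record.active
        and record.risk_score >= min_risk
        and record.registered >= cutoff
        and any(keyword in record.name.lower() for keyword in keywords)
    ]


today = date(2024, 4, 20)
sample = [
    DomainRecord("chatgpt-login.example", date(2024, 3, 1), 99, True),
    DomainRecord("openai-invest.example", date(2023, 6, 1), 97, True),   # older than 90 days
    DomainRecord("chatgpt-news.example", date(2024, 4, 1), 82, True),    # risk score below 95
    DomainRecord("openai-pay.example", date(2024, 2, 15), 100, False),   # not active
]

brands = ("chatgpt", "openai")
broad = filter_domains(sample, brands, min_risk=80, max_age_days=365, today=today)
narrow = filter_domains(sample, brands, min_risk=95, max_age_days=90, today=today)
print(len(broad), [record.name for record in narrow])
```

Tightening the thresholds shrinks the result set in the same way our data set dropped from nearly 10,000 domains to approximately 500.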

Figure 5 shows a parked domain, ChatGPTLogin[.]xyz, which is available for sale at a premium price from a subsidiary of GoDaddy, the largest domain registrar. This domain name is problematic for several reasons. First, the naming convention implies an affiliation with ChatGPT. Second, the use of ‘login’ within the domain suggests potential use for credential phishing or other malicious activities. Perhaps the biggest problem with such parked domains, of which we found many, is that leading registrars like GoDaddy facilitate, promote, and profit from the sale of sketchy domain names.

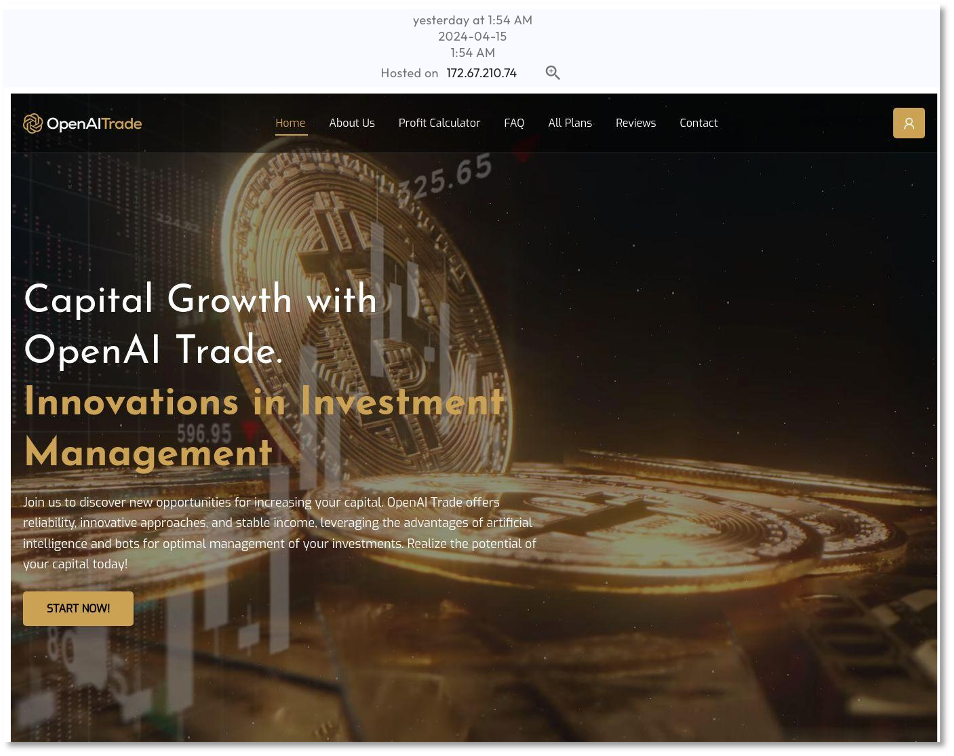

Figure 6 shows that the domain name openai-trade[.]io is an active site that implies a relationship with OpenAI, uses OpenAI's trademarked name and logo without apparent authorization, and appears to be an investment scam or fraud. The domain was registered through a Finnish registrar (Sarek Oy), was first seen on 6-March-2024, resolves to IP 104[.]21.61.124, is hosted by Cloudflare in the U.S., and has a DomainTools risk score of 100.

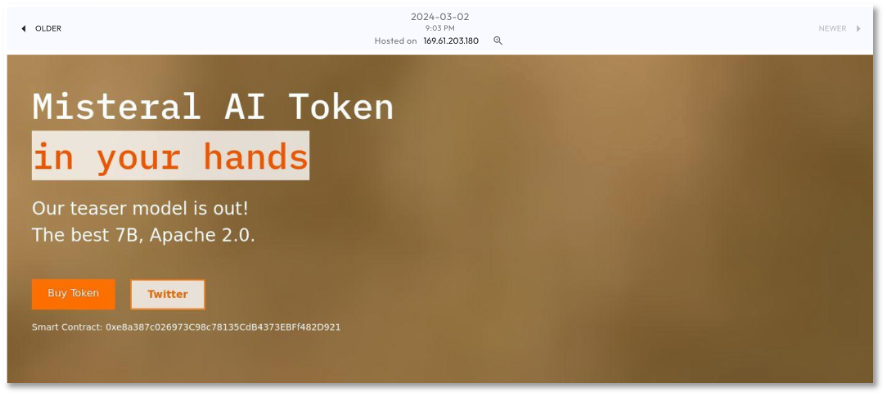

Finally, in Figure 7, the domain name mistraltoken[.]ai appears to be impersonating the French AI company Mistral. The landing page color scheme and template match those of the legitimate site at mistral[.]ai, but the impersonation site spells the name ‘Misteral’ rather than ‘Mistral’, which appears to be a typo. This domain has a DomainTools risk score of 100, was registered through the domain registrar Name.com Inc, resolves to 169[.]61.203.180, and is hosted by AS36351 SoftLayer Technologies in the U.S.
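The warning signs in these three examples (a brand name combined with a word like ‘login’, ‘trade’ or ‘token’, and a near-miss spelling such as ‘Misteral’) lend themselves to simple automated triage. The Python sketch below is our own rough heuristic, not how DomainTools computes its risk score; the word lists, the 0.8 similarity threshold, and the helper names are assumptions made for illustration.

```python
from difflib import SequenceMatcher

# Illustrative lists only; a real watchlist would be much longer.
BRANDS = ("chatgpt", "openai", "mistral")
RISKY_WORDS = ("login", "trade", "token")


def refang(domain):
    """Convert a defanged indicator such as name[.]xyz back to name.xyz."""
    return domain.replace("[.]", ".")


def flag_domain(domain):
    """Flag a domain whose first label combines a brand with a risky word."""
    label = refang(domain).lower().split(".")[0]
    brands = [brand for brand in BRANDS if brand in label]
    words = [word for word in RISKY_WORDS if word in label]
    return {"brands": brands, "risky_words": words, "suspicious": bool(brands and words)}


def looks_like_typo(term, brand, threshold=0.8):
    """True when a term is close to, but not identical to, a brand name."""
    a, b = term.lower(), brand.lower()
    return a != b and SequenceMatcher(None, a, b).ratio() >= threshold


for name in ("ChatGPTLogin[.]xyz", "openai-trade[.]io", "mistraltoken[.]ai", "mistral[.]ai"):
    print(name, flag_domain(name)["suspicious"])

print(looks_like_typo("Misteral", "Mistral"))
```

Substring matching of this kind will produce false positives and miss homoglyph tricks, so it is best treated as a first-pass filter ahead of manual review.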

Review and Outlook

The evidence gathered in our investigation supports the suspicions and expert analyses we’ve observed: AI-themed hype, grift, and fraud incidents are increasing in number and growing in sophistication. Unfortunately, when we widen the lens to include other types of crime and threats, we conclude that this limited-scope analysis likely understates the breadth and complexity of problems related to AI-facilitated fraud.

Areas beyond the scope of our current analysis that warrant attention include:

- Strengthening government oversight over critical internet infrastructure providers like domain registrars and hosting services to enable takedowns and other enforcement actions

- The ethical and legal implications of explicit, non-consensual deepfakes [8]

- The weaponization of AI in spreading disinformation with serious repercussions for elections and public health

- AI-enhanced phishing and malvertising techniques [9-11]

- The emergence of Adversarial AI, which gives malicious actors significantly more powerful capabilities [12-15]

To confront these challenges, cyber defenders need to embrace AI as a way to raise their skill level and strengthen the resilience of our digital infrastructure. In addition, stronger regulations and legal authorities are needed to preempt and counteract the misuse of AI. The AI arms race in cybersecurity is here and will continue.

Editor’s Notes:

- Credit to ChatGPT for valuable proofreading assistance.

- The Featured Image for this post was sourced using Google Image search from Indian Cyber Security Solutions (GreenFellow IT Security Solutions Pvt Ltd) on LinkedIn. The source contains two user comments: 1) “The article effectively communicates the gravity of these developments and the need for organizations to fortify their cybersecurity defenses against evolving ransomware strategies.” 2) “The shift towards data leaks and multi-variant attacks, combined with the use of wipers to destroy data, reflects an alarming escalation in the threat landscape”. My human intuition tells me both of these are examples of ‘comment spam’, a recognized and growing problem on LinkedIn. To get a second opinion, I figured I would have some fun and consult my chatbot suite. In essence, Perplexity, Claude and Copilot all gave a lightly qualified ‘good possibility’ as their assessment, while ChatGPT gave a more cautious ‘maybe’. What do you think?

References

1. The Financial Times – Huge AI funding leads to hype and ‘grifting’, warns DeepMind’s Demis Hassabis, 31-March-2024

2. Google Trends – Understanding the Data, as of 20-April-2024

3. U.S. Securities & Exchange Commission – SEC Charges Two Investment Advisers with Making False and Misleading Statements About Their Use of Artificial Intelligence, 18-March-2024

4. Securities and Exchange Commission v. Brian Sewell and Rockwell Capital Management LLC, No. 1:24-cv-00137 (D. Del. filed February 2, 2024), 2-Feb-2024

5. Federal Trade Commission (FTC) – FTC Consumer Sentinel Network Data Explorer

6. Federal Trade Commission (FTC) – FTC Proposes New Protections to Combat AI Impersonation of Individuals, 15-Feb-2024

7. Netcraft – The AI Gold Rush: ChatGPT and OpenAI targeted in AI-themed investment scams, 10-April-2024

8. Cybercrank – DEFIANCE and the Grim Reality of Explicit Deepfakes, 28-March-2024

9. Trustwave – The Inevitable Threat: AI-Generated Email Attacks Delivered to Mailboxes, 19-March-2024

10. Mandiant Blog – Opening a Can of Whoop Ads: Detecting and Disrupting a Malvertising Campaign Distributing Backdoors, 14-Dec-2023

11. The Hacker News – Malvertisers Using Google Ads to Target Users Searching for Popular Software, 20-Oct-2023

12. Darktrace – Navigating a New Threat Landscape: Breaking Down the AI Kill Chain, 6-Sept-2023

13. Cyberint – A.I – Trick or T(h)reat?, 4-Oct-2023

14. KrebsOnSecurity.com – Meet the Brains Behind the Malware-Friendly AI Chat Service ‘WormGPT’, 8-Aug-2023

15. Deloitte Cyber Threat Intelligence – How threat actors are leveraging Artificial Intelligence (AI) technology to conduct sophisticated attacks, March 2024