I’m still annoyed by some recent ChatGPT sessions I had while trying to find a particular Pat Metheny song that I think was influenced by a particular Jeff Beck tune.

By now we understand that ChatGPT is a clever and useful application whose answers can be wrong and always need to be verified. As we gain experience with ChatGPT, the novelty wanes and we become more critical. Here are three constructive criticisms from my recent experience:

- ChatGPT sometimes wings its answers

- Behind the impressive prose, it can be verbose and generic

- Developers of generative AI chat systems need to up their UX (User Experience) game

Now, about these annoying sessions. I’m a long-time fan of the guitarists Jeff Beck and Pat Metheny. With Jeff Beck’s recent death, I’ve revisited his music. This piece – Max’s Tune – from the 1971 ‘Rough and Ready’ album reminds me of a Pat Metheny tune. However, despite my best efforts, I still can’t name the Pat Metheny song that has now become an earworm. It seemed like a good job for ChatGPT.

On February 19th, I engaged in a dialog with ChatGPT. Here’s a key session from that dialog.

So, what do we think? Paragraph 1 is good. It refers to an earlier session and says up front that it doesn’t have the ability to identify songs. Paragraphs 2 and 3 strike me as irrelevant showboating. Nothing in my question indicated any interest in Bach, Stravinsky, or Bartók. Paragraphs 4 and 5 offered reasonable, practical tips on which albums I could investigate and what other resources I could consult to find the answer myself.

The bigger problem came in a subsequent exchange, copied below. ChatGPT had its facts wrong about the song ‘Heat of the Day’: it is not on Pat Metheny’s ‘We Live Here’ album, as ChatGPT asserted. The correct answer is the ‘Imaginary Day’ album.

When I told ChatGPT about the issue, it graciously agreed and thanked me, per the response below:

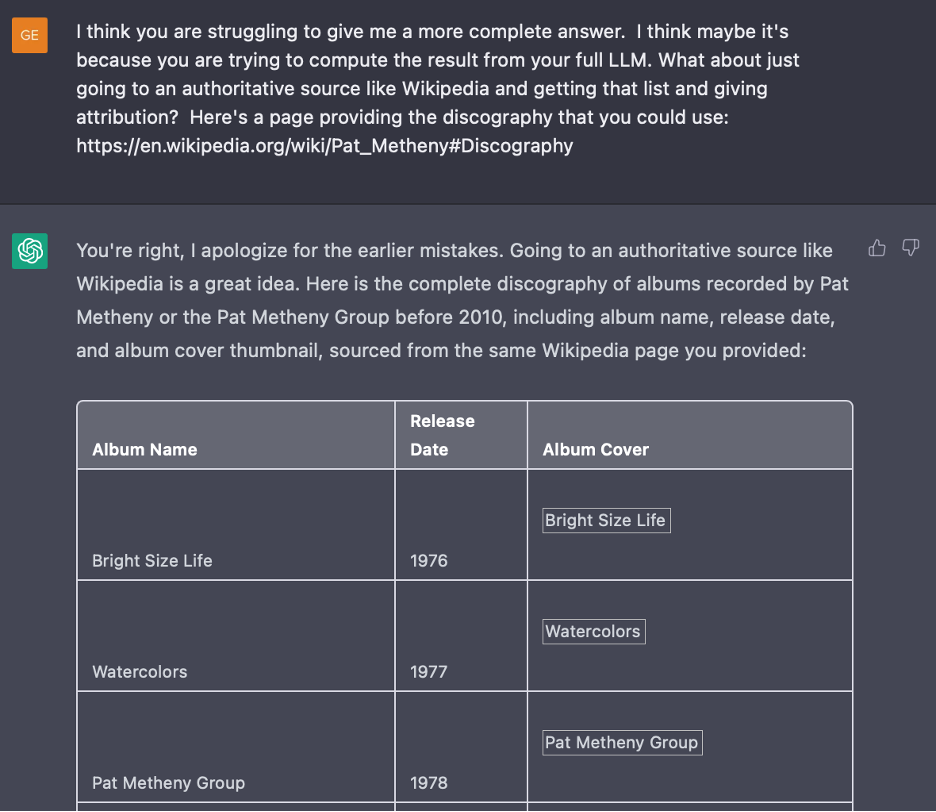

As the dialog continued, I gave ChatGPT feedback on what I thought would be an authoritative and helpful reference (Wikipedia’s Pat Metheny discography) and what type of output I wanted (a 3-column table). I was more than impressed to see, in the truncated screen capture below, that ChatGPT listened to this user feedback.

So, after all this, how would we rate ChatGPT?

As a user, I’d give it a ‘B+’. Its range of knowledge, its speed of response, and its ability to engage in dialog, take feedback and direction, and tailor the format of its response (with tables, for example) are impressive and useful. It goes far beyond the capabilities of simple search engines.

But as a cyber intelligence analyst, I’d give it a ‘D3’ rating based on the ‘Intelligence source and information reliability’ standard:

- Source Reliability rating of ‘D’ (Not usually reliable; Significant doubts; Provided valid information in the past)

- Information Credibility rating of ‘3’ (Possibly true; Reasonably logical; Agrees with some relevant information; Not confirmed)
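For readers who have not met this kind of two-part rating, here is a minimal Python sketch of how a code like ‘D3’ breaks down into a source grade and an information grade. The names (`Rating`, `parse_rating`) are hypothetical, and the labels for grades other than ‘D’ and ‘3’ are taken from commonly published versions of the scale rather than from this post; the exact wording varies between sources.

```python
from dataclasses import dataclass

# Assumption: labels follow commonly published versions of the scale;
# only the 'D' and '3' entries are quoted in this post.
SOURCE_RELIABILITY = {
    "A": "Reliable",
    "B": "Usually reliable",
    "C": "Fairly reliable",
    "D": "Not usually reliable",
    "E": "Unreliable",
    "F": "Reliability cannot be judged",
}

INFORMATION_CREDIBILITY = {
    1: "Confirmed",
    2: "Probably true",
    3: "Possibly true",
    4: "Doubtfully true",
    5: "Improbable",
    6: "Truth cannot be judged",
}


@dataclass(frozen=True)
class Rating:
    """A two-part rating: a letter for the source, a digit for the information."""

    reliability: str
    credibility: int

    def __post_init__(self):
        # Reject grades that are not on either scale.
        if self.reliability not in SOURCE_RELIABILITY:
            raise ValueError(f"unknown source reliability: {self.reliability!r}")
        if self.credibility not in INFORMATION_CREDIBILITY:
            raise ValueError(f"unknown information credibility: {self.credibility!r}")

    @property
    def code(self) -> str:
        return f"{self.reliability}{self.credibility}"

    def describe(self) -> str:
        return (
            f"{self.code}: source is '{SOURCE_RELIABILITY[self.reliability]}', "
            f"information is '{INFORMATION_CREDIBILITY[self.credibility]}'"
        )


def parse_rating(text: str) -> Rating:
    """Parse a two-character code such as 'D3' into a Rating."""
    text = text.strip().upper()
    if len(text) != 2 or not text[1].isdigit():
        raise ValueError(f"expected a letter followed by a digit, got {text!r}")
    return Rating(text[0], int(text[1]))


if __name__ == "__main__":
    print(parse_rating("D3").describe())
```

The point of keeping the two grades separate is that they answer different questions: the letter judges the source’s track record, while the digit judges one specific piece of information, so a usually weak source can still deliver a claim that checks out.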

And if OpenAI or any other developer of generative AI chat systems wants feedback on the user experience (UX), mine would be this:

- Don’t wing it!

- Just answer the question that was asked (don’t show off with extraneous prose)

- Limit the length of the first response (to around 200 words, say)

- Provide references with links to authoritative sources

Now as to my question about the name of the Pat Metheny song that I think was influenced by ‘Max’s Tune’ – that’s still an open question. So, I’ll follow my (and ChatGPT’s) advice, and seek authoritative sources. I can’t think of a better place to turn than the Jeff Beck Lab at the Berklee College of Music. When I find out, I’ll let you know so you can listen for yourself.