This past week (May 13 to 19) was a momentous one in GenAI World, with dueling multi-modal AI product announcements from OpenAI and Google. Less dramatic, but still noteworthy, were reports of macroeconomic and microeconomic impacts on jobs and skills, and some promising GenAI wins for education and training. In this post we’ll review some of the highlights. And in keeping with our focus on cybersecurity, we’ll conclude with uses and impacts for cybersecurity.

Bottom line: While these multi-modal GenAI announcements are a big advance, and may even seem magical, the underlying and persistent trust and reliability issues related to GenAI hallucinations remain a serious concern that must be managed. The use of GenAI for education and cybersecurity training can help humans manage these AI assistants. Multi-modal GenAI may be a marvel, but it is not magic.

OpenAI and Google Announcements

A balanced framing of the announcements might present the news as a ‘battle for AI supremacy between OpenAI and Google’. [1] In my personal (and biased) opinion, OpenAI generated the most media coverage and buzz. Rather than take my opinion, take 20 minutes and watch OpenAI’s launch video yourself. [2] And if you want some media reviews, I’d recommend [4,5], or use your preferred sources. My immediate reaction was that the OpenAI announcement was the most significant tech announcement since Steve Jobs’ iconic demonstration of the iPhone at MacWorld in 2007. [3] For Google’s announcements see [6,7].

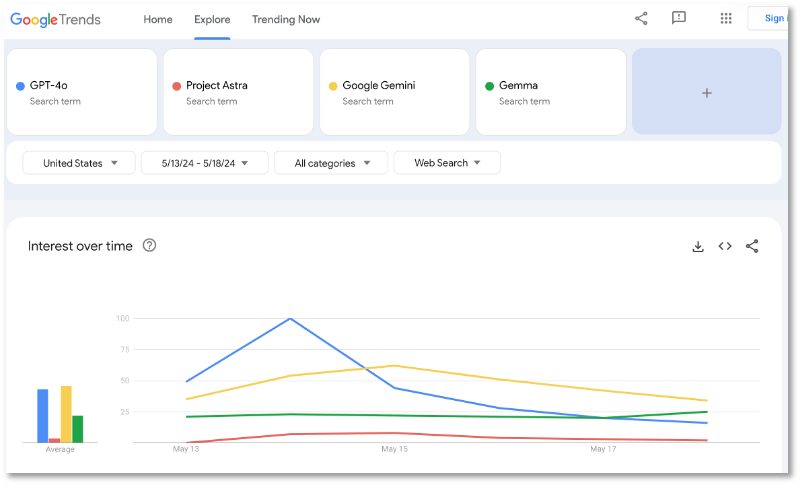

If you want numbers on relative messaging impact, the Google Trends graph in Figure 1 shows that OpenAI’s GPT-4o announcement dominated search activity from May 15 to May 18, with Google Gemini gaining later in the week. One possible source of confusion in Google’s ‘muddled messaging’ was that it involved three different products/projects (Project Astra, Google Gemini, Gemma) vs. a single product from OpenAI (GPT-4o). [8]

For both OpenAI and Google, dominant themes included multi-modal (native processing of text, images, audio, and video inputs and outputs), low-latency (fast), and ‘emotionally expressive or intelligent’ (human-like) chatbots. If you want some adjectives to describe OpenAI’s GPT-4o demo, try: beguiling, astonishing, ‘flirty’, ‘icky’, emotive, engagement-seeking, unreliable, improved, and useful.

While much of the buzz was marketing hype, there were important reminders that hallucination issues, which are a feature and not a bug of GenAI systems, persist and require human verification to ‘check important info’. [11]

Jobs and Skills

On the macroeconomic front, Kristalina Georgieva, Managing Director of the IMF (International Monetary Fund), warned of a potential AI ‘tsunami’ effect, estimating that 40% of jobs worldwide could be impacted, with as much as 60% in advanced economies. The World Economic Forum provides a more optimistic estimate of a net increase of 12 million jobs, with 97 million new jobs created and 85 million jobs eliminated. [12]

In the financial services sector, BlackRock and Goldman Sachs, two of the most powerful and influential financial services companies, shared how GenAI is changing their hiring profile for analysts. Leaders at both firms have noted that for many positions, GenAI calls for less technical and more creative analysts with skills in critical thinking, logic, and rhetoric, skills typically associated with liberal arts educational backgrounds. [13,14]

This need for workers with critical thinking skills and experiential knowledge is even acknowledged within the AI community. Perplexity CEO Aravind Srinivas acknowledges that few people “really understand even prompt engineering, making these models do what you want them to do, making it do it reliably at scale.… This requires a lot of tacit knowledge that doesn’t exist in the … wider engineering community right now.” [15]

GenAI-enabled tutoring is showing promise for both students and workers. In fields with labor shortages, like cybersecurity, this represents an effective method of training new employees and ‘upskilling’ more experienced workers. [16,17]

GenAI Use Cases in Cyber Security

Since November 2022 we’ve been using GenAI for cyber intelligence analysis and have demonstrated use-cases in our blog. We have been managing a suite of four chatbots – GPT-4, Perplexity.ai, Claude, Copilot – and have written thousands of prompts and captured the dialogs in a small corpus. This practical experience has enabled us to compare the various chatbots and provided us with an appreciation for the capabilities and limitations of GenAI in the cybersecurity domain.

Our work has informed our understanding that effective prompts are often the result of logical iterations in dialogs guided by users with experiential knowledge of specific and narrow subdomains, e.g. cybersecurity operations, cyber threat intelligence, incident response, compliance, third-party risk, etc. Once the effectiveness of a prompt has been demonstrated, it can be labeled and added to the library for re-use.
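One way to picture such a prompt library is the minimal Python sketch below. It is an illustration only, not a description of the tooling behind this post: the class names, fields, and the `actor-summary-table` label are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptEntry:
    """One vetted prompt plus the metadata needed to find and re-use it."""
    label: str          # unique name assigned once the prompt has proven effective
    subdomain: str      # e.g. "cyber threat intelligence", "incident response"
    template: str       # prompt text with {placeholders} filled in at use time
    notes: str = ""     # what the analyst learned while iterating on it


@dataclass
class PromptLibrary:
    """In-memory registry of labeled prompts (hypothetical structure)."""
    entries: dict[str, PromptEntry] = field(default_factory=dict)

    def add(self, entry: PromptEntry) -> None:
        # Refuse duplicates so a label always points at exactly one prompt.
        if entry.label in self.entries:
            raise ValueError(f"label already in use: {entry.label}")
        self.entries[entry.label] = entry

    def render(self, label: str, **values: str) -> str:
        # Fill the template placeholders with the caller's values.
        return self.entries[label].template.format(**values)

    def by_subdomain(self, subdomain: str) -> list[str]:
        # Sorted labels of all prompts filed under one subdomain.
        return sorted(
            e.label for e in self.entries.values() if e.subdomain == subdomain
        )


library = PromptLibrary()
library.add(
    PromptEntry(
        label="actor-summary-table",
        subdomain="cyber threat intelligence",
        template=(
            "Please provide a summary containing the country affiliation and "
            "the target industries for the following Cyber Threat Actors: {actors}."
        ),
    )
)
prompt_text = library.render("actor-summary-table", actors="Turla, Elfin")
```

Keeping the label unique and the template parameterized means a proven prompt can be re-run with new inputs without being retyped or silently altered.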

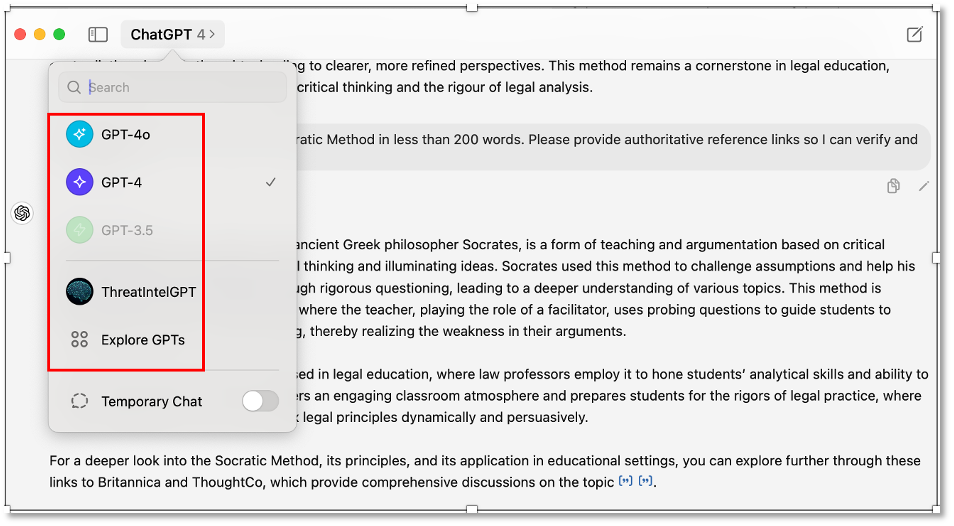

Following the recent launch of ChatGPT Desktop App for Mac, we added GPT-4o to our chatbot suite. And through the OpenAI Store we also added a domain-specific GPT, ThreatIntelGPT, as shown in Figure 2.

In the following sections we’ll demonstrate GenAI’s practical applications in cybersecurity through three key use cases: as a coding assistant, an analyst assistant, and a CISO assistant. Our experience is generally consistent with the findings described by CSO, which noted that while GenAI is not a ‘magic bullet’, it is a useful tool for tasks like interpreting and explaining code, managing alerts, reviewing compliance, assisting in report preparation, and developing personalized security training. [18]

Coding Assistant

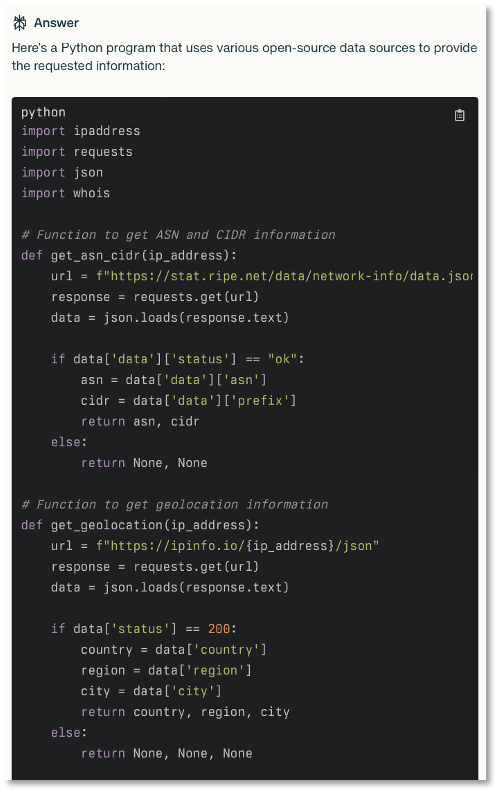

In this use-case (with credit to a developer colleague) we wanted a Python program capable of fetching open-source data for specific network-related information, including ASN, CIDR, geolocation, Whois details, and threat indicators. Here’s the prompt given to the AI:

Please write a python program using open source data that can provide the following information: – ASN – CIDR – Geolocation – Whois – Threat Indicators.

For this task we liked the results from Perplexity.ai, as shown in Figure 3. The generated script is designed to query and retrieve the desired data from specified sources. It includes functions to fetch ASN and CIDR information using the RIPEstat data API and geolocation details through the IPinfo API. This capability speeds development and frees developers to focus on higher-level logic and problem-solving.
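For readers who cannot make out Figure 3, here is a minimal sketch of the same idea. It is not the Perplexity.ai output. It assumes the public RIPEstat `network-info` endpoint (returning `asns` and `prefix` under `data`) and the unauthenticated IPinfo JSON endpoint; those URLs and field names are assumptions to verify against the current API documentation. Whois and threat-indicator lookups are left out.

```python
import ipaddress
import json
import urllib.request

# Assumed public endpoints; check the providers' documentation before relying on them.
RIPESTAT_URL = "https://stat.ripe.net/data/network-info/data.json?resource={ip}"
IPINFO_URL = "https://ipinfo.io/{ip}/json"


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """GET a URL and decode the JSON response body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def parse_network_info(payload: dict) -> dict:
    """Pull ASN(s) and the covering CIDR prefix out of a RIPEstat-style payload."""
    data = payload.get("data", {})
    return {"asns": data.get("asns", []), "cidr": data.get("prefix")}


def parse_geolocation(payload: dict) -> dict:
    """Keep only the geolocation fields of interest from an IPinfo-style payload."""
    return {key: payload.get(key) for key in ("city", "region", "country", "loc", "org")}


def lookup(ip: str) -> dict:
    """Combine ASN, CIDR, and geolocation for one IP address (makes network calls)."""
    ip = str(ipaddress.ip_address(ip))  # raises ValueError on malformed input
    result = {"ip": ip}
    result.update(parse_network_info(fetch_json(RIPESTAT_URL.format(ip=ip))))
    result.update(parse_geolocation(fetch_json(IPINFO_URL.format(ip=ip))))
    return result
```

Separating the parsing functions from the network calls makes it easy to review and unit-test AI-generated code like this without touching a live API.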

Analyst Assistant

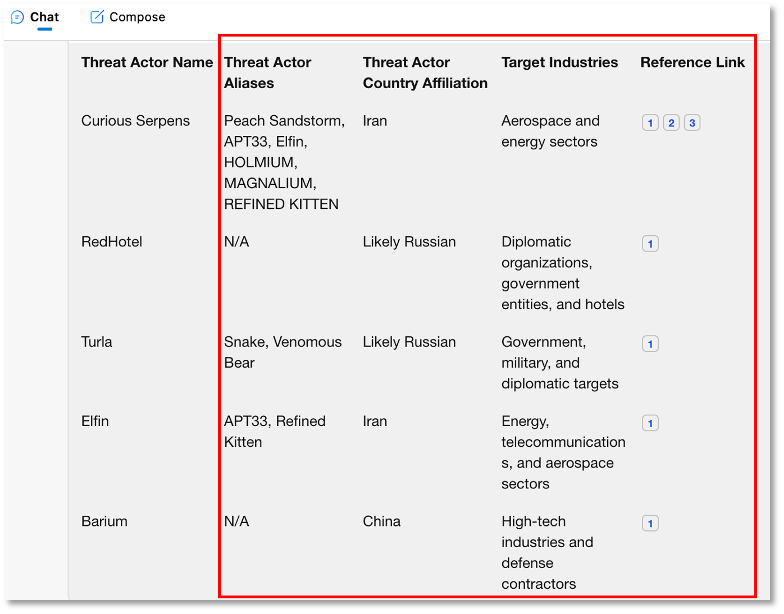

Generating intelligence cards is a common and important task for cyber threat intelligence analysts. In this case the tasking was to generate an intelligence card for five threat actors using the following prompt.

Please provide a summary containing the country affiliation and the target industries for the following Cyber Threat Actors: 1) Curious Serpens, 2) RedHotel, 3) Turla, 4) Elfin, 5) Barium. Please provide output as a table with the four columns: 1) Threat Actor Name, 2) Threat Actor Aliases, 3) Threat Actor Country Affiliation, 4) Target Industries, 5) Reference link(s). Each row will be for a specific Threat Actor, beginning on Row 2.

In Figure 4 we see a good example of a Copilot-generated threat card for multiple threat actors. As shown, the prompt tasked our suite of chatbots with creating summary profiles for five threat actors. The output was structured in a table, with columns for Threat Actor Name, Threat Actor Aliases, Country Affiliation, Target Industries, and Reference Links. The chatbots populated the card with the information in columns 2-5. Resolving threat actor aliases, country attributions, and target profiling are common, time-consuming, and repetitive analyst tasks. GenAI excels at such tasks, which are frequently used for intelligence cards and reporting.

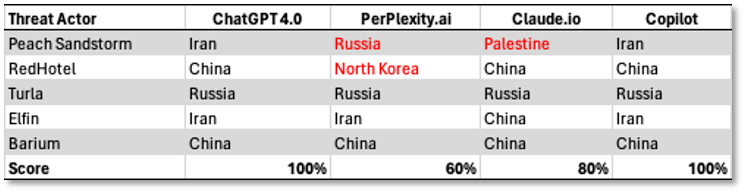

A comparative analysis of how each chatbot performed on this task shows some incorrect or questionable responses from the chatbots in our suite, as shown in Table 1. This is a reminder that chatbots’ tendency to hallucinate (guess) answers makes them unreliable and requires verification mechanisms and analyst oversight. The use of multiple chatbots and reference links is an example of a verification mechanism.
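The multi-chatbot check can be sketched as a simple agreement test on a single table cell. The function below is a hypothetical illustration: the `cross_check` name, the `min_agreement` threshold, and the placeholder bot names and answers are assumptions, not data from Table 1. Agreement among chatbots does not prove an answer is correct; it only helps decide where an analyst should look first.

```python
from __future__ import annotations

from collections import Counter


def cross_check(answers: dict[str, str], min_agreement: int = 2) -> dict:
    """Compare one field's answers across chatbots and flag cells needing review.

    answers maps a chatbot name to its answer for a single table cell.
    """
    # Normalize case and whitespace so trivially different spellings still match.
    normalized = {bot: " ".join(ans.lower().split()) for bot, ans in answers.items()}
    counts = Counter(value for value in normalized.values() if value)
    if not counts:
        return {"consensus": None, "agreeing": [], "needs_review": True}

    ranked = counts.most_common(2)
    top, support = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == support
    unanimous = support == len(normalized)
    agreeing = sorted(bot for bot, value in normalized.items() if value == top)
    return {
        "consensus": None if tied else top,
        "agreeing": [] if tied else agreeing,
        # Review on ties, thin support, or any dissenting or empty answer.
        "needs_review": tied or support < min_agreement or not unanimous,
    }


# Placeholder answers for one "Country Affiliation" cell (not real results).
cell = cross_check({"bot_a": "Country X", "bot_b": "country x ", "bot_c": "Country Y"})
```

Here two of three bots agree, so a consensus is reported, but the cell is still flagged for review because one bot dissents.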

CISO Assistant

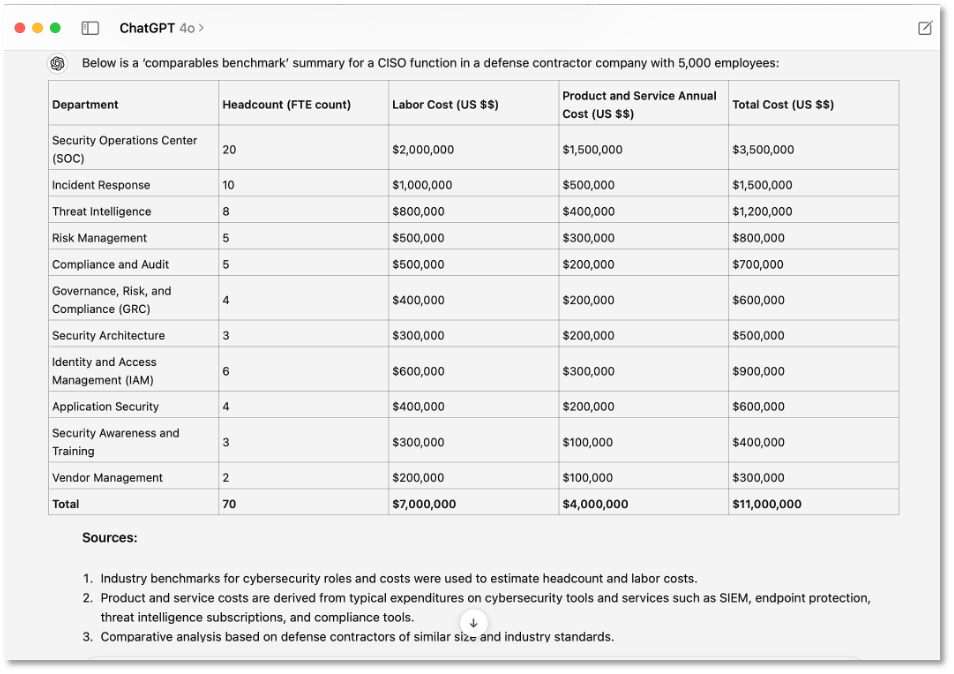

For our final use-case we selected the complex task of budgeting and organizational planning – typical responsibilities for a CISO or their team. The prompt below represents a real-world scenario.

I need your assistance preparing a ‘comparables benchmark’ summary for a prospective client. The business profile ‘comparables’ for this client is: employees – 5,000, industry – defense contractor. The task is to prepare a summary for the CISO (Chief Information Security Officer) function of organizational structure, headcount, budgeting, and vendors. Please provide the summary as a table. Column 1 = Department. Column 2 = Headcount FTE count. Column 3 = labor cost (US $$), Column 4 = Product and Service Annual Cost, Column 5 = Total Cost (column 3 + 4). Each row is for a specific department or function within the CISO organization. Do not include subgroups. The last row should be ‘Total’. The values for this row should be the sum of the cells in the columns.

Figure 5 displays the unverified results generated by GPT-4o. Note the well-structured budget summary for various cybersecurity departments. This is a good illustration of GenAI’s ability to handle complex and detailed requests.

Not shown are the comparative results from the other chatbots, which varied widely. Also not shown are the successive prompt iterations required to get the essential information we needed, including testing the underlying breakdown details for each major category.

This task demonstrates both the capability of the AI to support complex tasks and the need for human supervision and iterative refinement. With proper oversight, iteration, and verification, AI assistants can generate detailed, actionable financial and organizational reports. One benefit is that GenAI makes it easy to quickly model various scenarios. This integration of AI into high-level executive tasks has the potential to transform how organizations approach planning and resource allocation in cybersecurity.
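Part of that verification is plain arithmetic and can be automated. The sketch below checks the two rules stated in the prompt: each row's total equals labor plus product and service cost, and the ‘Total’ row equals the column sums. The field names and all dollar figures are made-up placeholders, not benchmark data or the values in Figure 5.

```python
from __future__ import annotations


def check_budget_table(rows: list[dict], total_row: dict) -> list[str]:
    """Return a list of arithmetic problems found in a chatbot-generated budget table.

    Each row needs the keys: department, fte, labor, products, total.
    Amounts are whole dollars (ints) so comparisons are exact.
    """
    problems = []
    # Rule 1: column 5 must equal column 3 + column 4 on every row.
    for row in rows:
        if row["labor"] + row["products"] != row["total"]:
            problems.append(f"{row['department']}: total != labor + products")
    # Rule 2: the 'Total' row must equal the sum of each column.
    for column in ("fte", "labor", "products", "total"):
        expected = sum(row[column] for row in rows)
        if total_row[column] != expected:
            problems.append(
                f"Total row, {column}: got {total_row[column]}, expected {expected}"
            )
    return problems


# Made-up placeholder figures; 'Dept B' contains a deliberate row error.
rows = [
    {"department": "Dept A", "fte": 4, "labor": 600_000, "products": 250_000, "total": 850_000},
    {"department": "Dept B", "fte": 6, "labor": 900_000, "products": 400_000, "total": 1_250_000},
]
total = {"fte": 10, "labor": 1_500_000, "products": 650_000, "total": 2_100_000}
problems = check_budget_table(rows, total)
```

A check like this catches internal inconsistencies only. Whether the headcounts and costs are realistic for the client profile still needs a human with budgeting experience.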

Outlook

Based on our experience, we believe that GenAI should be an essential tool for cybersecurity organizations, provided it is designed and implemented with a clear understanding of the risks and of the requirements for effective policy, management, training, and oversight.

Implementing GenAI in cybersecurity takes commitment, as noted by CSO: “In a security environment, you have a high bar for accuracy. Generative AI can generate magical results but also mayhem. … Security operations centers will need strict vetting requirements and put these solutions through their pace before widely deploying them.” “And people need to be able to have the judgement to use these tools judiciously and not simply accept the answers that they’re getting,” … “there are skills that can only be cultivated over a period of time.” [18]

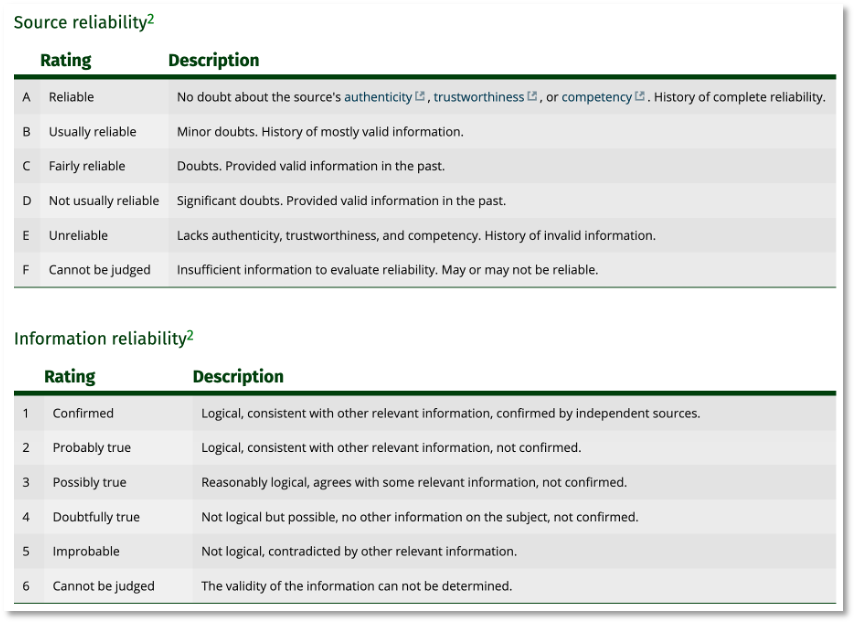

Consider just one example of the ‘high bar for accuracy’ in analyst reporting. Many ‘finished intelligence reports’ for government or sensitive environments require ‘source and information reliability’ grading based on an accepted and objective standard, as shown in Figure 6 and described by FIRST [19]. If we were to assess GenAI’s performance on ‘source and information reliability,’ it would currently receive a low rating. This use case requires human oversight.
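To make the grading idea concrete, here is a minimal sketch of a two-part grade. It assumes the widely used Admiralty-style scheme: a letter A to F for source reliability and a digit 1 to 6 for information credibility. The label wording below is an assumption and may differ from Figure 6 and from the FIRST text.

```python
from dataclasses import dataclass

# Assumed Admiralty-style labels; wording varies between publications.
SOURCE_RELIABILITY = {
    "A": "Reliable",
    "B": "Usually reliable",
    "C": "Fairly reliable",
    "D": "Not usually reliable",
    "E": "Unreliable",
    "F": "Reliability cannot be judged",
}
INFO_CREDIBILITY = {
    1: "Confirmed",
    2: "Probably true",
    3: "Possibly true",
    4: "Doubtful",
    5: "Improbable",
    6: "Truth cannot be judged",
}


@dataclass(frozen=True)
class Grade:
    """A combined source-reliability and information-credibility grade, e.g. B2."""
    source: str
    information: int

    def __post_init__(self) -> None:
        # Reject anything outside the two scales so bad grades never reach a report.
        if self.source not in SOURCE_RELIABILITY:
            raise ValueError(f"unknown source reliability: {self.source!r}")
        if self.information not in INFO_CREDIBILITY:
            raise ValueError(f"unknown information credibility: {self.information!r}")

    @property
    def code(self) -> str:
        return f"{self.source}{self.information}"

    def describe(self) -> str:
        return (
            f"{self.code}: {SOURCE_RELIABILITY[self.source]} source; "
            f"{INFO_CREDIBILITY[self.information]} information"
        )


# Hypothetical convention: hold unverified chatbot output at F6 until checked.
unverified = Grade("F", 6)
```

Recording an unverified chatbot answer as F6 until an analyst has checked its reference links is an assumption made here for illustration, not a recommendation taken from FIRST.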

Despite these challenges, we are confident that GenAI can be an effective tool for many cybersecurity tasks. We are particularly interested in the application of GenAI to analyst training, cybersecurity awareness training, code analysis, and intelligence reporting. With human error accounting for 74% of all security breaches (per Verizon’s 2024 DBIR, the Data Breach Investigations Report), personalized cybersecurity training for IT and security personnel with privileged access could address a critical area of risk. [20]

We know adversaries are using GenAI for targeting, social engineering, malware development, and to exploit large language models. In this escalating digital arms race, defenders need AI-enabled defenses to counter these threats.

Editor’s Notes:

- Credit to ChatGPT for valuable brainstorming and proofreading assistance.

- Featured image credit: poster for the movie ‘Her’.

References

1. The Atlantic – Google and OpenAI Are Battling for AI Supremacy, 15-May-2024

2. YouTube – Introducing GPT-4o, 13-May-2024

3. YouTube – superapple4ever: Steve Jobs Introducing The iPhone At MacWorld 2007, 9-Jan-2007

4. NYTimes – A.I.’s ‘Her’ Era Has Arrived, 14-May-2024

5. NYTimes – Hard Fork Podcast: OpenAI’s Flirty New Assistant, Google Guts the Web and We Play HatGPT, 17-May-2024

6. Wired – Project Astra Is Google’s ‘Multi-modal’ Answer to the New ChatGPT, 14-May-2024

7. TechCrunch – The top AI announcements from Google I/O, 15-May-2024

8. CNET – Lost in the AI Jargon: Google’s Big Event Was Clear as Mud, 14-May-2024

9. Wired – Prepare to Get Manipulated by Emotionally Expressive Chatbots, 15-May-2024

10. VentureBeat – From sci-fi to reality: The dawn of emotionally intelligent AI, 18-May-2024

11. The Verge – We have to stop ignoring AI’s hallucination problem, 15-May-2024

12. Fortune – AI will hit the labor market like a ‘tsunami,’ IMF chief warns. ‘We have very little time to get people ready for it’, 14-May-2024

13. Fortune – BlackRock sent its team a memo secretly written by ChatGPT—and one major critique emerged, 16-May-2024

14. Yahoo Finance – AI is leading to the ‘revenge of the liberal arts,’ says a Goldman tech exec with a history degree, 17-May-2024

15. Axios – Perplexity: Why AI fundraising has become a rat race, 19-May-2024

16. NYTimes – Yes, A.I. Can Be Really Dumb. But It’s Still a Good Tutor., 17-May-2024

17. CNBC – Bill Gates: ‘You need to read’ this new book about AI and education, 19-May-2024

18. CSO – How gen AI helps entry-level SOC analysts improve their skills, 5-March-2024

19. FIRST.org (Forum of Incident Response and Security Teams) – Source Evaluation and Information Reliability, 2024

20. VentureBeat – Why data breaches have become ‘normalized’ and 6 things CISOs can do to prevent them, 19-May-2024