In this post we review and offer insights on how ‘narrative laundering’ is used in Russian influence operations. This post was inspired by recent research from Clemson University and reporting from The Washington Post. Drawing on additional insights from DomainTools and the EU’s EUvsDiSiNFO Database, along with findings from our own research, we show the persistence of the Kremlin’s disinformation campaigns, their supporting infrastructure, and their target languages and audiences. We conclude with thoughts on future directions and countermeasures.

Top Insights:

- Evidence of the Kremlin’s disinformation laundering methods and messaging themes reported in March 2023 can still be observed as of 24-Feb-2024.

- Much of the domain name and hosting infrastructure for the Kremlin’s disinformation campaigns relies on services from U.S. and U.K. companies.

- Language analysis of Russian disinformation cases indicates that Russia’s top targets are speakers of Russian, of the languages of Russia’s CIS allies, and of Italian, French, and Arabic, which suggests that Russia is defensive about how it is perceived.

- With U.S. funding for Ukraine blocked by Congress, the campaign is successfully influencing U.S. opinion and legislation.

About Narrative Laundering

The Clemson report traces how Russian influence operations have weaponized disinformation, increasingly through digital technologies, across multiple campaigns spanning fifty years. [1] It describes narrative laundering as a three-stage process by which disinformation is planted and spread. The goal of these campaigns is to undermine popular opinion in countries that oppose Russia while strengthening Russia’s image among its supporters.

The laundering process begins with the placement of a false or misleading story on an inauthentic social media account. The second stage, layering, expands the story across influential websites, blogs, and legitimate social media, lending a veneer of credibility to facilitate spread. The final stage, integration, sees the narrative adopted by mainstream or reputable sources, imbuing it with the authority needed for widespread dissemination.

The specific subject matter of the claims does not matter. A claim may be plausible or a fringe conspiracy theory. All that matters is that it builds support for Russian interests while harming Russia’s adversaries. These influence operations are asymmetric, incurring low costs for attackers while imposing high costs on defenders.

Clemson Disinformation Campaigns

The Clemson research examines the placement, layering, and integration methods used in one primary campaign and twelve supplemental campaigns from 31-Aug to 8-Dec-2023. It details common themes such as corruption allegations against Zelensky and his wife, accusations that NATO and Ukrainian troops are rapists and murderers, and claims of U.S. exploitation of the Ukrainian people. In two cases, Arab populations are portrayed as victims.

Each campaign began with the placement of a video, purporting to be eyewitness testimony, on an inauthentic social media account. This initial step, though straightforward, sets the stage for the more challenging task of making the story seem organically widespread through the layering phase. Layering involves endorsement by more credible media websites, which reference the initial story and add further spin. These websites reached audiences in the Middle East, Africa, and Russia, with a few focusing on Europe and one on India.

The last stage of the laundering process, integration, is the point where the narratives from the layered sources gain traction. This is enabled by an authentic social media influencer and/or a credible website with followers or audiences predisposed to accept pro-Russian or anti-Western messaging. For all these campaigns, the integration site, dcweekly[.]org, masquerades as a professional, right-leaning news organization from D.C. Despite its facade, its content and editorial team are fabrications, powered by digital technologies, including generative AI. Previously associated with and registered by John Mark Dougan, a former U.S. police officer who fled to Russia, DC Weekly is suspected to be linked to or directly controlled by the Russian government.

Kremlin Disinformation Campaign

The Washington Post’s reporting on a disinformation campaign directly managed by senior Kremlin operatives mirrors the narrative laundering playbook described in the Clemson research. [2] The Post’s reporting is based on hundreds of Kremlin documents obtained by foreign intelligence services. It describes a campaign that plants the seed of a core theme, conflict between Zelensky and Ukraine’s generals, and amplifies it to create discord and weaken Ukrainian and allied resolve.

By March 2023, a troll operation involving dozens of content producers was generating hundreds of articles on websites, which were amplified in thousands of social media posts on Telegram, X, and Facebook/Instagram. The articles and posts were translated into French, German, and English. In the first week of May, one Facebook post alone had 4.3 million views. The Russian campaigns targeting Ukraine also employed methods used in Doppelgänger operations targeting Europe, such as cloning and impersonating media and government websites and creating fake social media accounts for officials. [7]

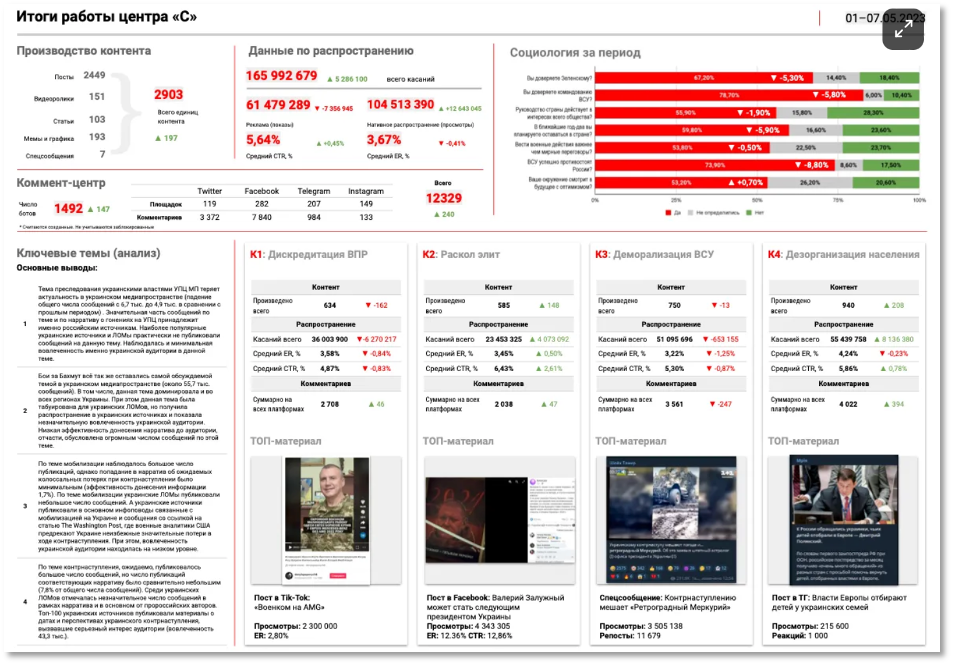

The Kremlin-run operation was highly sophisticated. Teams had clear objectives and metrics, used polling to track effectiveness, and were paid up to $39,000 to plant pro-Russian commentary in major Western media outlets. Dashboards with Key Performance Indicators (KPIs) for ‘Content Production’, ‘Distribution Data’, and ‘Sociological Data’ provided real-time tracking of the operation’s success, as shown in Figure 1. [3]

Data Insights

In this section, we present new examples and insights from our investigation into Russian narrative laundering and disinformation campaigns, using DomainTools, the EUvsDiSiNFO Database, and our own open-source research.

DomainTools: The Clemson report lists 32 website domain names. To understand the history, usage, and supporting infrastructure of the domains used for integration and layering, we extracted these domains and created an investigation and tag in DomainTools.
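The post does not say how the domains were pulled from the report. As a minimal sketch, assuming the report text is available as a plain string, the hypothetical helpers `refang` and `extract_domains` below undo the two defanged notations used in this post (`dcweekly[.]org` and `dcweekly[.org]`) and collect unique hostnames in order of first appearance, ready to paste into a DomainTools investigation. The hostname pattern is deliberately simple and will also pick up ordinary domains mentioned in prose, so the output still needs a manual review.

```python
import re


def refang(text: str) -> str:
    """Turn defanged hostnames such as 'dcweekly[.]org' or 'dcweekly[.org]' back into plain form."""
    text = text.replace("[.]", ".")
    # Handle the variant where the bracket wraps the whole TLD, e.g. 'dcweekly[.org]'.
    return re.sub(r"\[\.([A-Za-z]{2,})\]", r".\1", text)


# Simple hostname pattern: dot-separated labels of letters, digits, and hyphens,
# ending in an alphabetic TLD of two or more letters (so 'U.S.' is not matched).
HOSTNAME = re.compile(r"\b(?:[A-Za-z0-9-]+\.)+[A-Za-z]{2,}\b")


def extract_domains(text: str) -> list[str]:
    """Return unique, lower-cased hostnames in order of first appearance."""
    seen: dict[str, None] = {}  # dict preserves insertion order
    for match in HOSTNAME.finditer(refang(text)):
        seen.setdefault(match.group(0).lower(), None)
    return list(seen)
```

Keeping the first-seen order makes it easy to cross-check the extracted list against the order in which the report introduces each site.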

Of the 32 domains, 20 are hosted in the U.S., 6 in Russia, and 6 in other countries. Cloudflare is the leading hosting provider, hosting 14 of the domains. We also created two segments: integration websites and layering websites.

The integration website, dcweekly[.]org, is hosted by Cloudflare (AS13335) on a shared public server that resolves to multiple Cloudflare IP addresses. Whois records show a change of registrar on 24-Feb-2024 from LiquidNet Ltd., a U.K. company, to Tucows.com, a U.S.-Canadian company. A DomainTools screen capture of the dcweekly[.]org homepage as of 24-Feb-2024 is shown in Figure 2. On the surface, the site appears to be a professional, right-leaning U.S. news site.

![Figure 2. dcweekly[.]org DomainTools screenshot 2-24-2024](https://cybercrank.net/wp-content/uploads/2024/02/Blog-Narrative-Laundering-Figure-2-dc-weekly-org-2-26-2024.png)
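The observation that dcweekly[.]org resolves to Cloudflare addresses can be spot-checked without a commercial tool. As a minimal sketch, the hypothetical helpers below resolve a hostname and test each IPv4 address against Cloudflare's published ranges. The range list is a snapshot of https://www.cloudflare.com/ips-v4 and is an assumption that must be refreshed before use. A match shows only that a site sits behind Cloudflare's network; it does not reveal the origin server.

```python
import ipaddress
import socket

# Snapshot of Cloudflare's published IPv4 ranges (https://www.cloudflare.com/ips-v4).
# Assumption: the list changes over time and should be refreshed before use.
# IPv6 ranges are deliberately omitted, so IPv6 addresses always return False.
CLOUDFLARE_IPV4 = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "173.245.48.0/20", "103.21.244.0/22", "103.22.200.0/22", "103.31.4.0/22",
        "141.101.64.0/18", "108.162.192.0/18", "190.93.240.0/20", "188.114.96.0/20",
        "197.234.240.0/22", "198.41.128.0/17", "162.158.0.0/15", "104.16.0.0/13",
        "104.24.0.0/14", "172.64.0.0/13", "131.0.72.0/22",
    )
]


def resolve_ipv4(hostname: str) -> set[str]:
    """Live DNS lookup: every IPv4 address the local resolver returns for `hostname`."""
    infos = socket.getaddrinfo(hostname, 443, socket.AF_INET, socket.SOCK_STREAM)
    return {info[4][0] for info in infos}  # info[4] is the (address, port) sockaddr


def is_cloudflare(ip: str) -> bool:
    """True if `ip` falls inside one of the Cloudflare IPv4 ranges listed above."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in CLOUDFLARE_IPV4)
```

For example, `{ip: is_cloudflare(ip) for ip in resolve_ipv4("dcweekly.org")}` performs a live DNS lookup, so its result depends on the resolver and on the date it is run.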

We also labeled and analyzed twenty-two layering websites, most of them traditional media websites in their respective regions. The regional breakdown below indicates Russian targeting priorities. Tailored campaigns and narratives target hot-button issues in each region, with the goal of influencing behavior in ways that benefit Russia and harm Ukraine and the West.

- Africa 6 (27%)

- Russia 5 (23%)

- Europe 4 (18%)

- Middle East 3 (14%)
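The percentages above, and the hosting split reported earlier, can be recomputed directly from the counts. The sketch below uses only figures stated in this post; `percent_shares` is a hypothetical helper that rounds halves up, because Python's built-in `round` rounds halves to even (62.5 becomes 62). It also makes explicit that the four listed regions cover 18 of the 22 layering sites, leaving 4 outside the list.

```python
import math


def percent_shares(counts: dict[str, int], total: int) -> dict[str, int]:
    """Whole-number percentage of `total` for each category, rounding halves up."""
    if sum(counts.values()) > total:
        raise ValueError("category counts exceed the total")
    return {name: math.floor(100 * n / total + 0.5) for name, n in counts.items()}


# Layering websites by region, as listed above (22 sites in total).
LAYERING_BY_REGION = {"Africa": 6, "Russia": 5, "Europe": 4, "Middle East": 3}
# Hosting country for all 32 Clemson domains, as reported via DomainTools.
HOSTING_BY_COUNTRY = {"U.S.": 20, "Russia": 6, "Other": 6}

layering_pct = percent_shares(LAYERING_BY_REGION, 22)
unlisted_layering = 22 - sum(LAYERING_BY_REGION.values())  # sites outside the four regions
hosting_pct = percent_shares(HOSTING_BY_COUNTRY, 32)
cloudflare_pct = percent_shares({"Cloudflare": 14}, 32)
```

Rounded shares need not sum to 100: the hosting split comes to 63 + 19 + 19 = 101.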

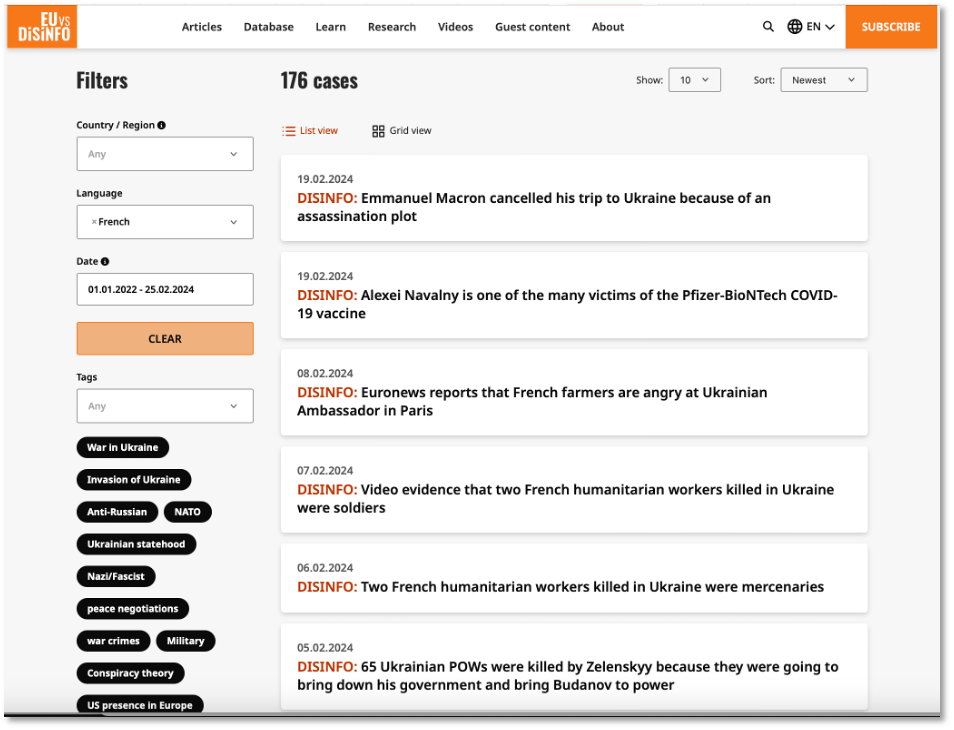

EUvsDiSiNFO Database: The EUvsDiSiNFO Database is an open web resource developed and maintained by the East StratCom Task Force, a team of experts from the EU’s diplomatic service with backgrounds in communications, journalism, social sciences, and Russian studies. Launched in 2015, the database held 16,629 cases as of 25-Feb-2024. Each case documents and explains an instance of Russian disinformation found on media outlets, think tank websites, blogs, or government-affiliated websites.

The web version of the database provides a structured source of labeled data that can be filtered by date, language, country or region, tag, or keyword. The backend database provides additional data, including the media outlets used in each case. The screenshot in Figure 3 shows an example of a query filtered by date (1-Jan-2022 to 25-Feb-2024) and language (French). A quick glance at the recent cases gives a sense of the narratives and their intent to influence the opinions and behaviors of French speakers.

Analyzing the language of disinformation narratives also yields valuable insights. For example, among the more than 3,000 cases posted since 1-Jan-2022, we found that 46% were in Russian or in the languages of Russia’s allies and neighbors in the CIS (Commonwealth of Independent States) region: Armenian, Azerbaijani, Georgian, and Kazakh. From this finding we might infer that Russia is concerned and defensive about its standing with its own people and its allies, a potentially valuable insight for planning countermeasures.
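The date and language filters described above can be reproduced offline once cases are exported. As a minimal sketch, assuming each exported case carries a publication date and a single language label, the hypothetical `Case` record and helpers below select cases from a start date onward and compute the share written in Russian or the four CIS-region languages named above. The `Case` fields are illustrative, not the database's actual schema, and no case data is included here; the 46% figure comes from our own filtering.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional


@dataclass(frozen=True)
class Case:
    """Hypothetical minimal record for one exported case (not the real schema)."""
    case_id: str
    published: date
    language: str  # assumption: a single language label per case


# Russian plus the four CIS-region languages named in the text.
RUSSIAN_AND_CIS = frozenset({"Russian", "Armenian", "Azerbaijani", "Georgian", "Kazakh"})


def filter_cases(cases: Iterable[Case], since: date,
                 language: Optional[str] = None) -> list[Case]:
    """Cases published on or after `since`, optionally limited to one language."""
    return [
        c for c in cases
        if c.published >= since and (language is None or c.language == language)
    ]


def language_share(cases: list[Case], languages: frozenset[str]) -> float:
    """Fraction of `cases` whose language is in `languages` (0.0 if there are none)."""
    if not cases:
        return 0.0
    return sum(c.language in languages for c in cases) / len(cases)
```

`language_share(filter_cases(cases, date(2022, 1, 1)), RUSSIAN_AND_CIS)` mirrors the post-2022 language analysis, and `filter_cases(cases, date(2022, 1, 1), "French")` approximates the French query in Figure 3.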

Recent Findings of Narrative Laundering: Using basic Google search queries, we found current examples on 24-Feb-2024 that directly support the narrative laundering research from Clemson and the Washington Post reporting.

As reported by NewsGuard on 23-Feb-2024, Navalny’s death provided a new source of narratives for conspiracy theorists. [4] One of the websites spreading these conspiracies, the leak site The Intel Drop (theinteldrop[.]org), based in Iceland and hosted by Cloudflare in the U.S., was also cited by the Clemson researchers as a layering site in seven of the campaigns. On 24-Feb, the Google query ‘site:theinteldrop.org Navalny’ returned 55 stories on Navalny published in the past week. Figure 4 shows one of those stories, with two highlighted areas. First, the post is dated 18-Feb-2024, only two days after Navalny’s reported death, yet another reminder of how quickly conspiracy theories spread on the Internet. Second, the site’s sharing features, including fifteen buttons linking to prominent social media sites, accelerate spread. The use of a leak site is also reminiscent of Russia’s use of WikiLeaks in the 2016 U.S. presidential election.

![Figure 4. theinteldrop[.]org layering website via Google, 2-18-2024](https://cybercrank.net/wp-content/uploads/2024/02/Blog-Narrative-Laundering-Figure-4-the-intel-drop-2-26-2024.png)
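The site-restricted search used above is easy to repeat for each layering domain in the Clemson list. As a minimal sketch, the hypothetical `site_query_url` helper builds the Google search URL for a `site:` query, and `days_between` computes the lag between an event and a post about it, here Navalny’s reported death on 16-Feb-2024 and the 18-Feb-2024 post in Figure 4. The sketch only builds the URL for manual review; it does not fetch or parse results, and any date-range restriction (such as the past-week window used above) is left to Google’s own search tools.

```python
from datetime import date
from urllib.parse import urlencode


def site_query_url(domain: str, keyword: str) -> str:
    """Google web-search URL for a `site:`-restricted keyword query (built, not fetched)."""
    # urlencode percent-encodes ':' as %3A and turns spaces into '+'.
    return "https://www.google.com/search?" + urlencode({"q": f"site:{domain} {keyword}"})


def days_between(event: date, post: date) -> int:
    """Whole days from an event to a post about it."""
    return (post - event).days
```

For example, `site_query_url("theinteldrop.org", "Navalny")` reproduces the query used above, and `days_between(date(2024, 2, 16), date(2024, 2, 18))` returns 2, the lag highlighted in Figure 4.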

Finally, Figure 5 shows a story on dcweekly[.]org purportedly written by staff writer Jessica Devlin on 22-Feb-2024. The story supports the core narrative theme of conflict between Zelensky and the Ukrainian military and/or people, which was promoted in the Kremlin campaigns described by the Washington Post and in six of the campaigns described in the Clemson report. The Clemson report also found that the author, Jessica Devlin, is fictitious. As with nearly all the content on dcweekly[.]org, we assume that the story is spin, fiction, or AI-generated.

![Figure 5. Disinformation post on dcweekly[.]org](https://cybercrank.net/wp-content/uploads/2024/02/Blog-Narrative-Laundering-Figure-5-2-26-2024.png)

Directions and Countermeasures

With its special military operation in Ukraine entering its third year, and elections in the U.S. and 50 other countries offering opportunities for influence, we anticipate that Russia will continue to execute its narrative laundering playbook in its influence operations. Insights from this analysis, such as language preferences and the use of U.S. hosting and domain registration services, could inform the development of effective countermeasures. By understanding these tactics, defenders could deploy counter-influence campaigns more strategically and more readily pursue actions such as takedowns of specific sites and sanctions against enablers. Such measures would increase Russia’s operational costs and reduce its asymmetric advantage. Solutions that classify disinformation through human review, such as NewsGuard and EUvsDiSiNFO, are also needed, as are AI-based narrative intelligence solutions that can operate at scale, such as Blackbird.ai. [4,5,8]

Notes:

- ChatGPT was used for editorial review. While valuable, its effect on the final product was negligible.

- The source of the image for this blog is EUvsDiSiNFO.

References

1. Linvill, Darren and Warren, Patrick – “Infektion’s Evolution: Digital Technologies and Narrative Laundering”, Media Forensics Hub Reports 3, 2023. https://tigerprints.clemson.edu/mfh_reports/3

2. The Washington Post – Kremlin runs disinformation campaign to undermine Zelensky, documents show, 16-Feb-2024

3. NewsGuard Substack: Reality Check – Kremlin’s World-Class Dashboard Maximizes Disinformation, at 26 Cents Per Lie, 21-Feb-2024

4. NewsGuard Substack: Reality Check – Even in Death: Russian Dissident Navalny Sparks Conspiracy Theory, 23-Feb-2024

5. EUvsDiSiNFO Database – https://euvsdisinfo.eu

6. Graphika – Secondary Infektion, June 2020

7. Cybercrank.net – Profiling and Countering Russian Doppelgänger Info Ops, 10-Dec-2023

8. Blackbird.ai – How Disinformation Campaigns Magnify Cyberattacks: Insights And Strategies For Defense, 22-Feb-2024

9. Bluesky Social – Kyle Ehmke